Optimizing our way through Metroid

People ask me: “why do you let your employees spend so much time playing Nintendo games?” People think we do it for the marketing. People think we do it to have cool demos. People think our blog series on learning autonomous testing concepts via how they come up in games is a pedagogical gimmick and nothing more.

People are totally wrong.

The honest truth, the underlying reality beneath the hype, is that this is actually how we figured this stuff out. None of us were fuzzing or PBT experts coming into this business, and if we were that wouldn’t have helped anyway, because our ambitions quickly went way beyond the state of the art in those fields. So we started asking questions like: “why can’t you beat The Legend of Zelda with a fuzzer,” and pixel by grueling pixel we learned enough to build the Antithesis platform.

The lessons we learn in the Nintendo domain transfer very well to our core business of testing big, complicated distributed systems (if you don’t believe me, I gave a whole talk on that topic). Today’s war story is about one such lesson – the barrier we encountered in a Nintendo game, the new technique it inspired, and how that makes our platform smarter and better at testing everything. Let’s get started!

The case of the red door

Metroid (1986) broke all kinds of ground when it came out – featuring nonlinear, exploration-based gameplay, challenging tactical sequences, and cleverly-hidden secrets. To our knowledge, the game has never been successfully completed by an autonomous system. But Antithesis cruises through most of the game with ease: fighting enemies, gathering power-ups, and slowly filling in the entire map…

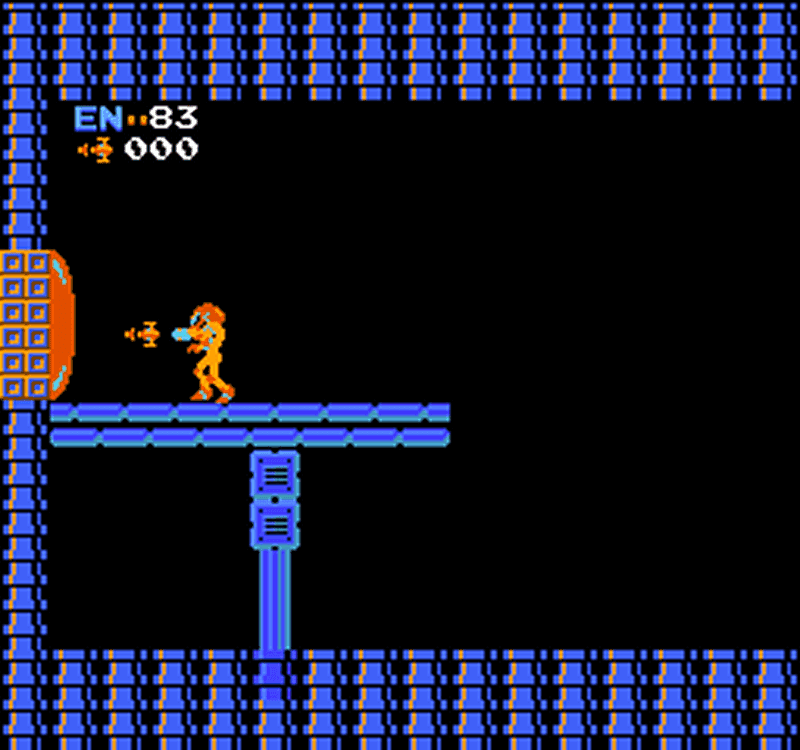

…until it gets to this door:

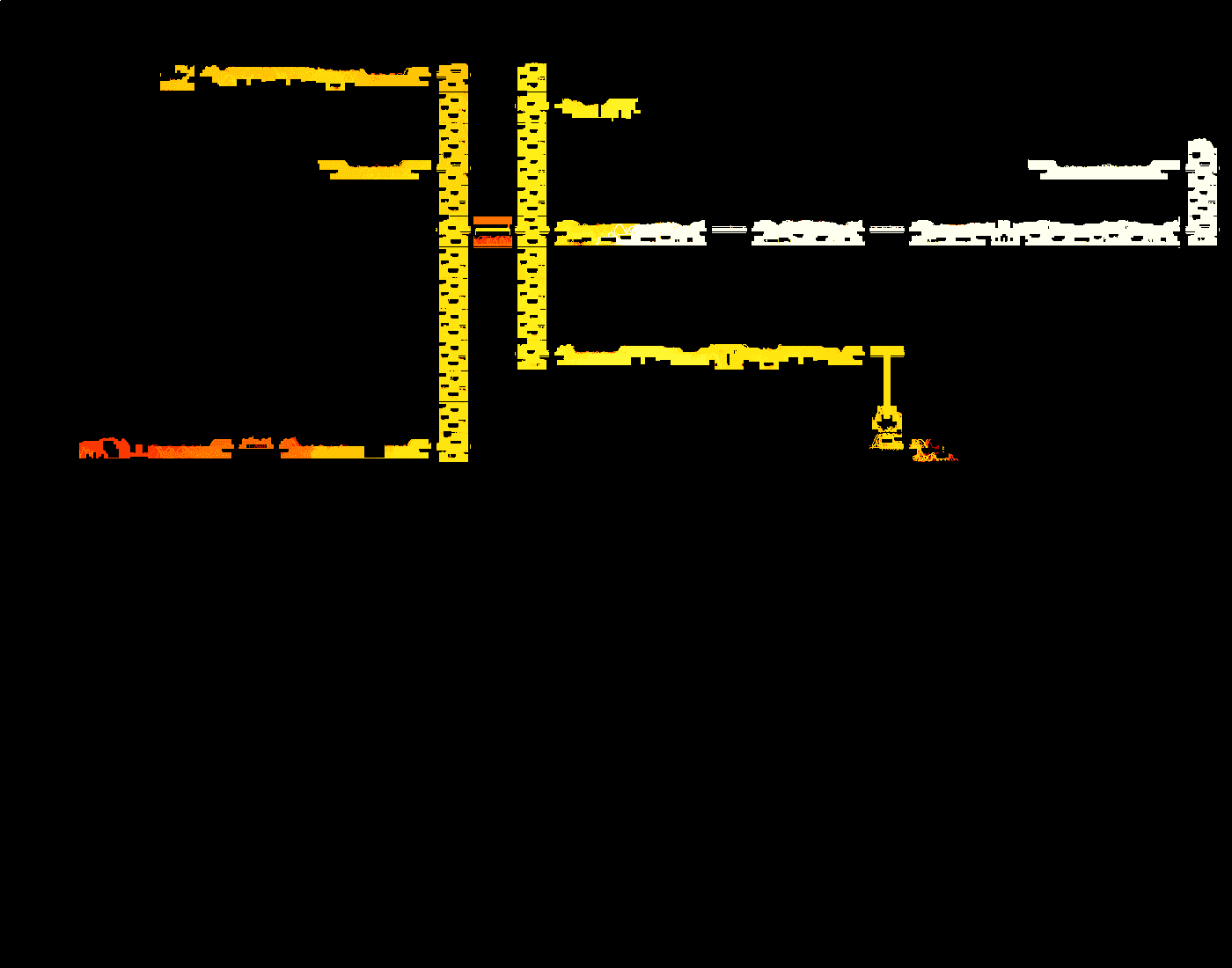

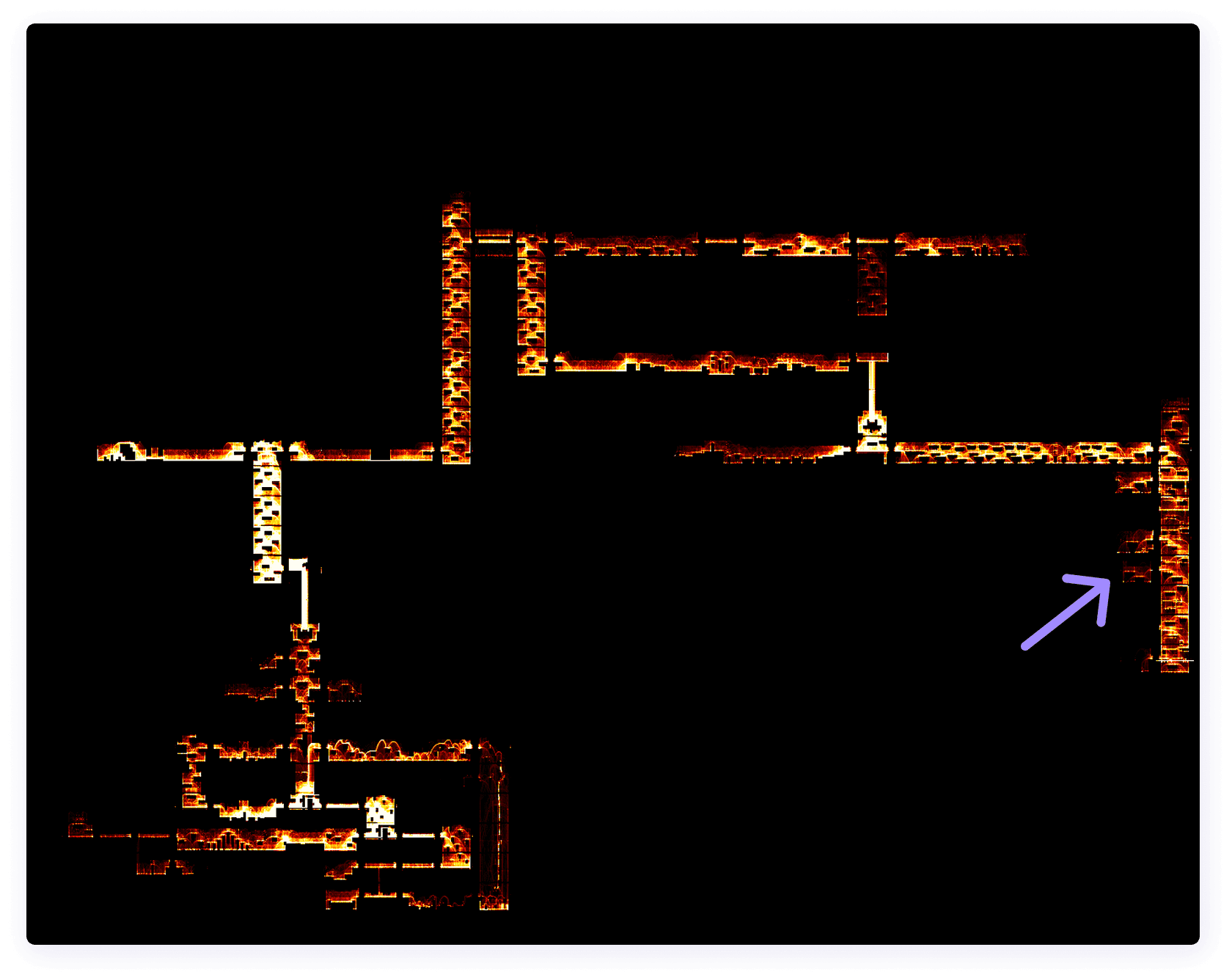

How do we know that that door is a problem? Well, while we’re testing your software, we stream observations of the system under test into our analytic database. In the case of Metroid, some important observations include Samus’s X and Y position on the game map. Then we ask our database very nicely to render a jpeg for us1, and hey presto:

That’s a recognizable subset of the map of the Metroid overworld. Which subset? Why the subset you can visit without going through any red doors like that, and without the bombs (which are behind that red door). So our diagnosis is correct, Antithesis is getting stuck on that door2, but why?

Antithesis allows us to deterministically rematerialize any moment that we encountered in a test campaign, so let’s just pick one of those dots right by the door and see if we can figure out what the problem is. Here’s one such moment, can you see the problem?

I’m guessing those of you with your hands up have played Metroid before! Yes, that’s correct, the problem is that we don’t have any more missiles. Getting through one of those red doors in Metroid requires firing 5 missiles at it. But that’s just a single example of us standing in front of the door. In order to prove that this is the problem, what we really want to ask our database is: “do we ever get close to the red door with 5 or more missiles in our inventory?”

Questions like this are pretty important when trying to determine why your test system is or isn’t finding some bug, so we have a whole product feature to help you ask it. I could show you a heatmap of everywhere we’ve visited with 5+ missiles, but I’ll cut to the chase because it’s basically just this screen (where you first get the missiles):

And there we have the problem. We do pick up the missiles, but we quickly spend them again. And why not? Missiles are a consumable item for a reason, shooting them at enemies makes progress through the game a lot easier, and our testing system quickly figures that out. Heck, quite aside from the fact that they’re useful, our system is one that occasionally takes random actions. So it’s exponentially unlikely that we will avoid ever pressing the button that fires the missiles.3

A brief message from our sponsors

And now an aside (and an advertisement): while this kind of analysis of why your testing system has gotten “stuck” is very important, it’s also very hard. Our viewpoint is that it’s unreasonable for every team out there that’s practicing PBT or fuzzing or DST or whatever to reinvent the wheel and learn how to get good at state space exploration. Moreso than making your system deterministic, making it “smart” is a giant barrier to adoption of these techniques, and it’s one we aim to eliminate by solving these problems once and for all in a generic way. If that sounds appealing to you, get in touch!

A few bad ideas

Okay, back to the NES game! How could we encourage Antithesis to hoard its missiles and not spam them for no reason? Well the fundamental approach we were taking to Metroid was very similar to how we beat Zelda, using something analogous to the SOMETIMES_EACH method in the Antithesis SDK. To recap, SOMETIMES_EACH is a kind of sometimes assertion that takes a tuple of values, and equalizes exploration for each unique value of the tuple that’s been seen. In the case of Metroid, the thing we were feeding to that tuple was a (suitably discretized) representation of Samus’s position on the game map.

One possible solution would be to just add “number of missiles” into the tuple that we’re feeding to SOMETIMES_EACH, this would ensure we explore every corner of the map with all possible numbers of missiles, which would in fact guarantee that at some point we stand in front of the door with 5 of them. Unfortunately, that approach doesn’t work very well – the set of distinct {x, y, m} tuples is huge, which both puts memory pressure on various parts of our system, and means we don’t spend very much compute energy on any particular value. It’s also completely unscalable, because what do you do when you encounter the next value you care about, for instance Samus’s health? The more dimensions you add to the tuple grid, the slower the whole system gets.

Another idea would be to say: “once you’ve gotten the missiles, only accept states of the game where Samus is carrying 5 or more missiles”. We could probably rig up some kind of weird assertion that guaranteed that. But it’s also a bad solution, in an opposite way to the previous approach. This requirement is overly restrictive – we don’t actually mind using our missiles to get past some tricky enemy, provided that we eventually collect them again. And what do we do about areas, for example the very area we’re trying to get to, that are impossible to reach without using the missiles? Surely we’d rather visit them with no missiles than not visit them at all.

No, as is often the case in API design, what we really want here is just to say what we want, dammit, and let the system figure out how to give it to us. What we really want is: “explore all the {x, y} tuples, but all things being equal, prefer states with more missiles to fewer.” And it seems like there’s an obvious implementation too: along with each of the tuples, track the number of missiles we had when we discovered it. If we see a new tuple, add it to our list of representatives. If we see a new state that fits in an existing {x,y} bucket, compare the number of missiles, and choose it as our new representative if we have more. Sounds straightforward, but there’s just one problem: it’s much too slow.

How to win friends and optimize things

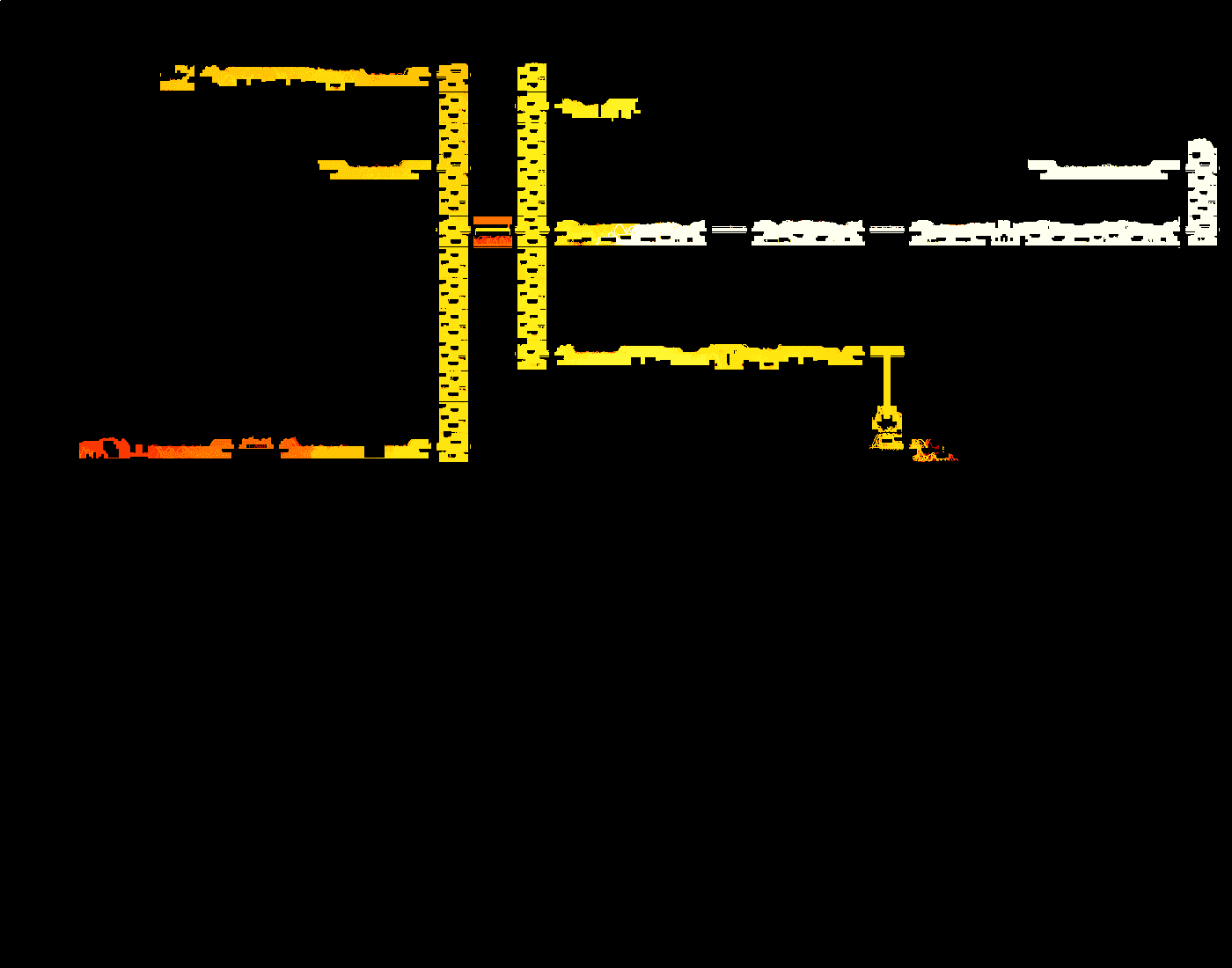

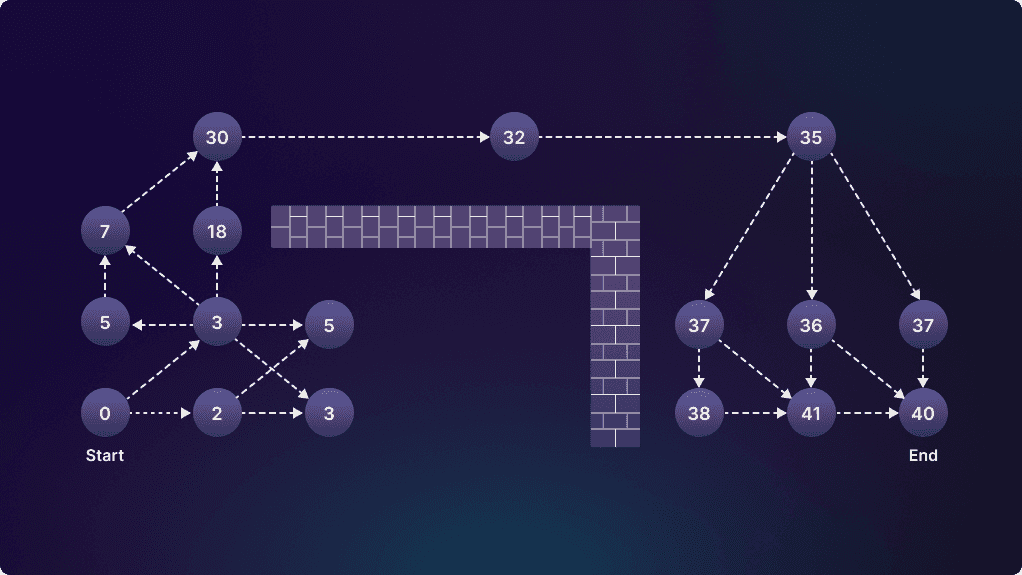

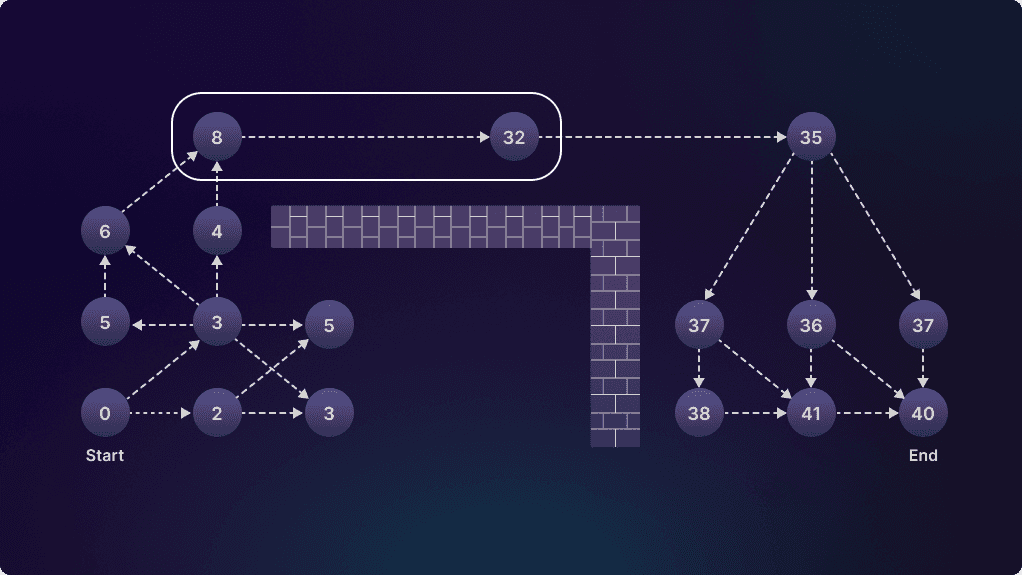

Asymptotically slow, that is. To see why, let’s consider a simpler game, like Mario, where the only quantity that really matters4 given your position on a level is the amount of time left on the clock. The state transition diagram below is a simplified model of a Mario level – the circles are “positions” on the Mario map, the arrows show when it’s possible to get from one position to another, and the numbers are the fastest time yet that we’ve been able to get to that position. For simplicity, assume that it takes one second to traverse each of the arrows.

Clearly there is a lot of room for improvement here – in theory it should be possible for us to reach the end in just 7 seconds rather than 40! But the naive algorithm will take way too long to figure that out. If we had perfect knowledge, we would want to focus our optimization efforts on the particular area of the game highlighted below, where we take a whopping 27 seconds to enter the bottleneck that gets us around that wall. The purely random approach outlined in the previous section will waste a lot of time optimizing areas of the game that don’t matter.

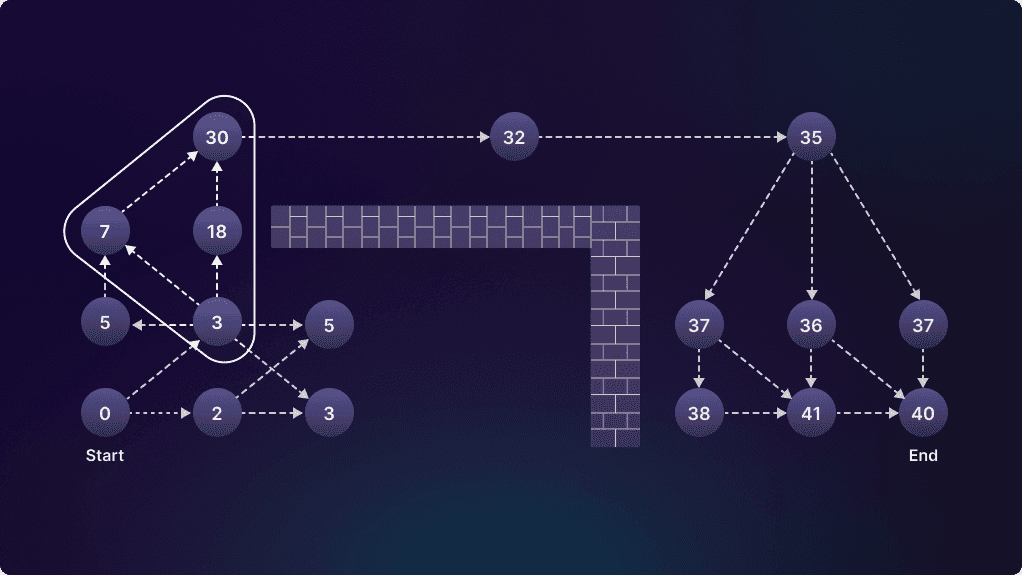

But there’s another, more fundamental problem. Suppose that we poured a bunch of energy into optimizing that set of transitions, and found a faster way to the location that it previously took us 30 seconds to get to. We now need to propagate that improvement through the rest of the level, like this:

But doing this using the naive algorithm is actually quadratic in the number of distinct game states we discover. That’s really not okay. But getting asymptotically faster requires us to maintain a connectivity graph of all the system states, and to remember what the best known transition costs are. And there’s one additional wrinkle: the Antithesis system is very good at state space exploration, which means it’s continuously discovering weird shortcuts. For example, what if we learned how to clip through this wall?5

In general, the topology of the state space under exploration may change drastically over the course of a testing run. The data structures required to do all of this bookkeeping with a constantly updating set of locations, and make smart decisions about where to invest optimization energy, all while keeping lightning-fast performance in the fuzzer (we really don’t want “policy” to ever be the bottleneck, the goal is to always keep the hypervisor saturated), are not trivial. But boy do they make a difference. Here are two videos of routes through the first level of Mario – the first is with a naive exploration strategy like you’d get from SOMETIMES_EACH, the second is with optimization turned on. See if you can spot the difference.

If you’re a fuzzing or PBT expert, you may think this all sounds awfully familiar. Yes, it’s highly analogous to test case shrinking/minimization! The two ways in which it’s more general are: (1) we can optimize for things besides length of repro, and (2) we’re optimizing continuously during the exploration process, not just when a crasher or counterexample is found.

Breaking out of Brinstar

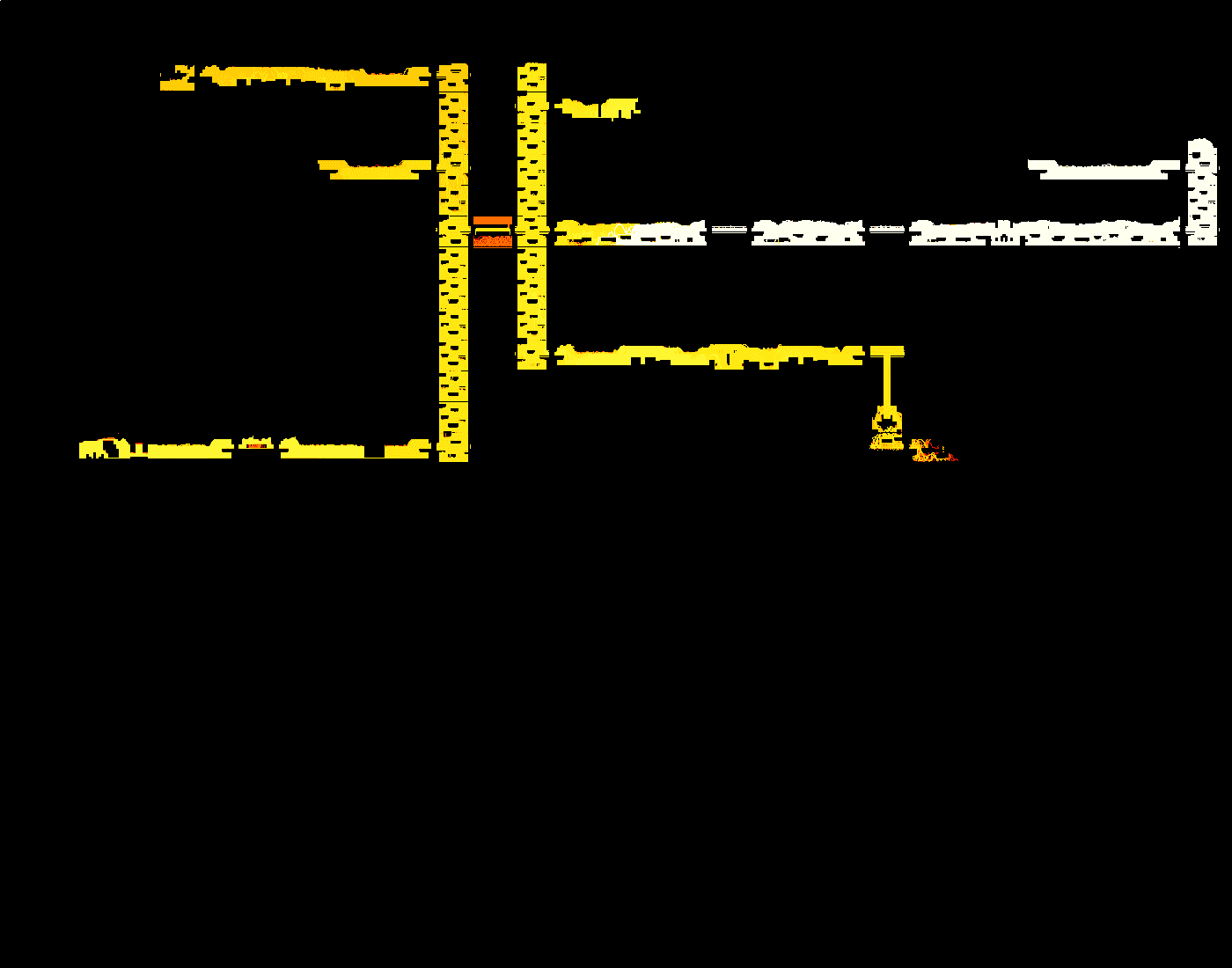

Let’s go back to Metroid. Once we enable our new algorithm, we get very pleasing images of optimization waves sloshing through the game as we explore. For example, that image you saw at the top of this post was color-coded by health (brighter is better). Once we enable optimization, we quickly go from that picture:

To this one a few minutes later:

See how it’s pushed the higher health region into the area in the bottom left? An unanticipated benefit of enabling optimization is that we actually explore the game much faster. On reflection, this makes total sense – it’s easier to play the game when you have more health! And then, we flip the switch to add in optimization for missiles and… immediately we get the bombs and away we go.

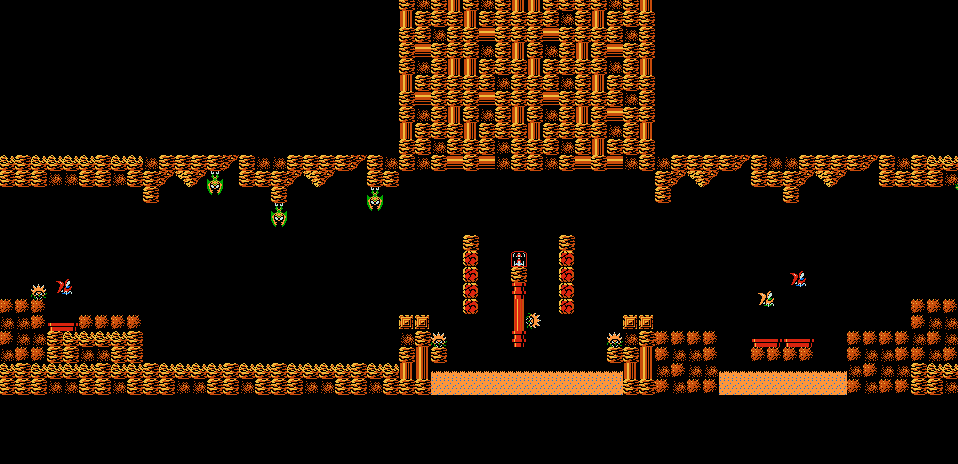

In fact, with that one change we begin destroying this game. The Antithesis platform quickly ferrets out the game’s bosses, and once told to optimize for lower boss health slaughters them in short order (with lots of abuse of i-frames):

The optimization policy also produces some eerily human-seeming behavior. For example, it learns that it can “farm” health and missiles anywhere that there’s an endless stream of relatively weak enemies:6

Fuzzers love guessing passwords – here’s an outtake of it deciding to start the game with some major powerups (we didn’t keep this run, that would be cheating!):

And naturally… it finds lots of bugs. Here we are using the favorite speedrunner technique of bomb-jumping to sequence break in Kraid’s lair:

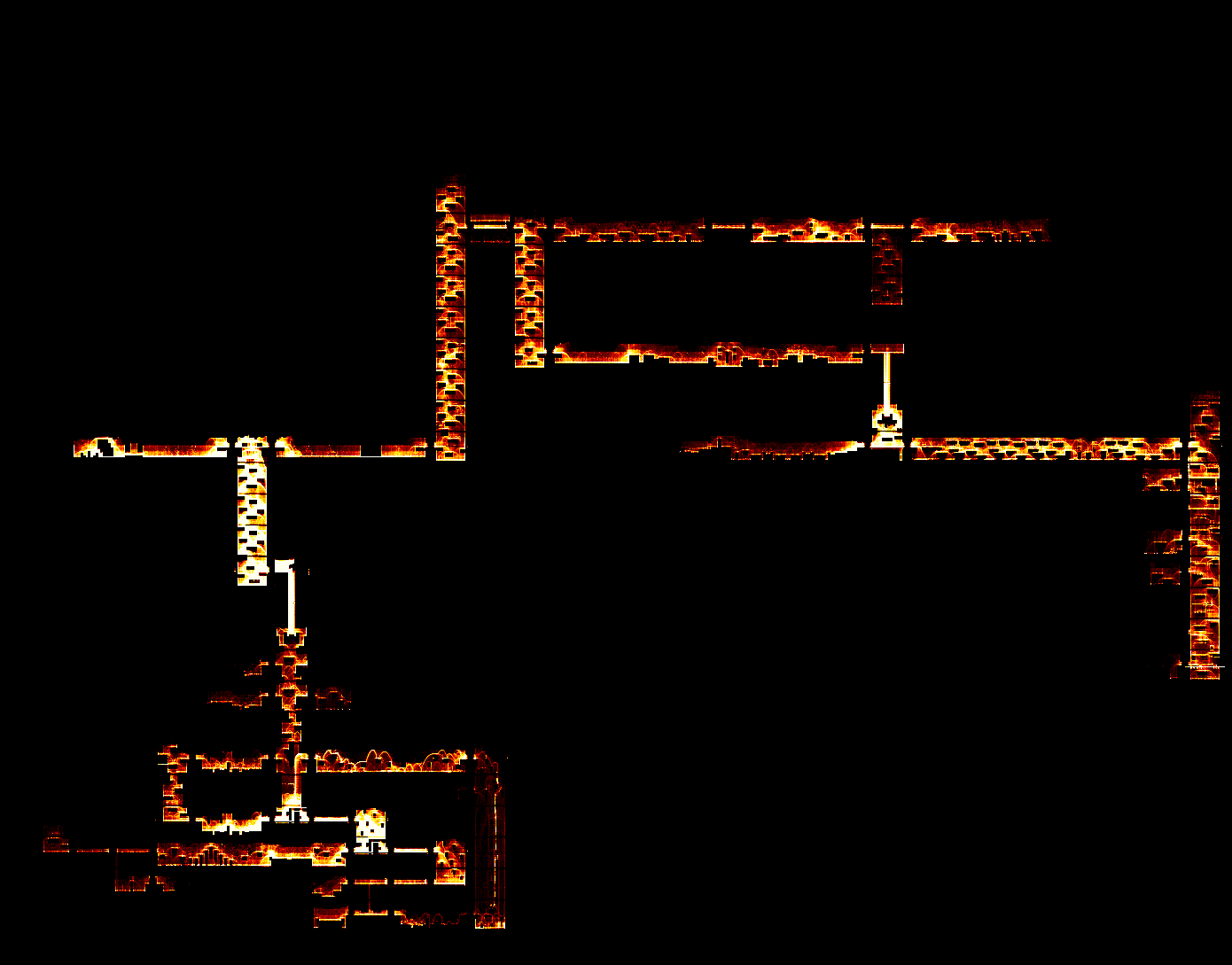

Some bugs we can spot just by looking at another jpeg of where we get. Can you spot the bug?

Here’s a hint, this room does not exist:

Yes, we are able to glitch through walls and go out of bounds more or less at will.

And, of course, we defeat Mother Brain and beat the game. Here’s a full (sped-up) video of that:

Optimization is everywhere

Metroid was the most taxing Nintendo game beaten by Antithesis so far, and forced us to invent multiple new state-space exploration techniques, chief among them the capability to fuzz a program while dragging along an orthogonal optimization objective. But if you think about it, this capability is core to what a fuzzer needs to do. Want to look for memory leaks while fuzzing an API? That’s optimization! Looking for hangs or other performance anomalies in a distributed system? Fuzz with optimization! Trying to find an input that causes some buffer to overflow? Optimization may do the trick!

This was the hardest game for us to beat, but every game taught us something new, and we have not yet begun to tell you all the lessons we learned from this diverse and challenging problem domain. Stay tuned for more posts in this series!