Your computer can test better than you (and that's a good thing)

If enough marketing dollars go behind promoting a buzzword without a formal definition, it begins to lose all meaning. Ask ten developers about NoSQL, and you’ll hear eleven interpretations. Request a definition of “transactions” from database vendors, and you’ll find yourself navigating a maze of nuanced explanations. In software, clarity is often the rarest commodity.

So when I say “autonomous testing,” what comes to mind? AI-generated unit tests? I hope not. Our defense against AI code generation? For all our sakes, I hope so. Before the industry renders this buzzword as meaningless as most other software terms, let’s look closely at it and establish a clear definition.

The first two things I want to understand when learning about a new software concept are what it is and why I should care. Somewhere between the what and the why, I want to develop a clear mental model of how the concept applies to me as a developer delivering software to the real world. So, we’ll look at both today.

When tests write themselves

While there are plenty of ways to test software – unit testing, regression testing, smoke testing – most of them rely on developers predicting the scenarios their software will face in production. After all, the goal is software that is production-ready.

Imagine a world where your computer can generate tests that match the dynamism and unpredictability of your toughest production issues. No more spending countless hours crafting test cases by hand, trying to anticipate every possible edge case. Instead of this tedious and error-prone manual process, your computer intelligently generates tests that catch the kinds of unexpected issues that keep developers up at night. That’s what we’re talking about here. Yet we still need a clear definition that leaves no room for ambiguity.

Autonomous testing is the approach of having a computer continuously generate tests that are validated against an oracle.

Let’s break this down:

- Tests are generated by computers, not humans

- Results are validated against an oracle that determines correctness

These two requirements are inseparable. A computer cannot meaningfully check your software without an oracle (such as TLA+ specifications, OpenAPI schemas, Proto files, Jepsen models, assertion statements, etc.) that defines its capabilities and limitations. Without such information, a computer cannot know how your software is supposed to behave. Consequently, any generated tests are restricted to catching generic bugs that are universally undesirable – like server crashes, panics, deadlocks, and memory leaks. They cannot validate your business logic – the unique aspects of your software that serve your specific use case. Only you know how your software should and should not behave.
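
To make the definition concrete, here is a minimal Python sketch of the idea. It is not any particular product’s implementation, and every name in it is hypothetical: `BuggyKVStore` stands in for your software (with a deliberately planted bug), a plain `dict` serves as the reference-model oracle, and `generate_ops`, `run_against_oracle`, and `autonomous_test` are illustrative helpers. The `except` branch can only catch generic failures like crashes; the divergence check is what catches the business-logic bug, and it only works because the oracle says what correct looks like.

```python
import random


class BuggyKVStore:
    """Hypothetical system under test: a tiny key-value store with a planted bug."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def delete(self, key):
        # Planted bug: deleting key 0 is silently ignored.
        if key != 0:
            self._data.pop(key, None)

    def get(self, key):
        return self._data.get(key)


def generate_ops(rng, length):
    """Let the computer, not a human, decide which operations to try."""
    kinds = ["put", "delete", "get"]
    return [(rng.choice(kinds), rng.randrange(5), rng.randrange(100)) for _ in range(length)]


def run_against_oracle(ops, store_factory=BuggyKVStore):
    """Replay ops on the store and on a reference model (a plain dict) acting as the oracle.

    Returns a description of the first crash or divergence, or None if every step agrees.
    """
    store, model = store_factory(), {}
    for step, (kind, key, value) in enumerate(ops):
        try:
            if kind == "put":
                store.put(key, value)
                model[key] = value
            elif kind == "delete":
                store.delete(key)
                model.pop(key, None)
            else:
                actual, expected = store.get(key), model.get(key)
                if actual != expected:
                    # Business-logic bug: only detectable because the oracle defines "correct".
                    return f"step {step}: get({key}) returned {actual!r}, oracle expected {expected!r}"
        except Exception as exc:
            # Generic bug: a crash is undesirable no matter what the oracle says.
            return f"step {step}: {kind}({key}) raised {exc!r}"
    return None


def autonomous_test(seed, iterations=1_000, store_factory=BuggyKVStore):
    """Generate and check random operation sequences; return the first failure found."""
    rng = random.Random(seed)
    for _ in range(iterations):
        ops = generate_ops(rng, length=20)
        failure = run_against_oracle(ops, store_factory)
        if failure:
            return ops, failure
    return None


if __name__ == "__main__":
    result = autonomous_test(seed=42)
    print(result[1] if result else "no failure found")
```

Nobody wrote the failing sequence by hand: the generator produced it, and the oracle judged it.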

This might raise the question: “How do I know if I’m doing autonomous testing right?” Well, here are a few telltale signs:

- Your code coverage increases the longer your computer generates tests, spending compute time instead of human time

- You uncover surprising edge cases that even the most creative developer wouldn’t have thought to test for

- Your generated tests hold up better under code changes, sparing you the usual headache of updating test suites after every small change

- You can confidently deploy any day of the week (even Friday) without fear of blowing through your error budget

Great, your computer can test better than you. But how does this approach to testing fit into today’s software delivery practices? Why is this really a good thing for me as a developer? For that, let’s shift gears and explore how autonomous testing rearranges the canonical DevOps cycle that underpins most software delivery today.

The great DevOps reboot

Testing is a huge part of delivering software, and delivery performance is often measured through frameworks like DORA. DORA tracks four key metrics that teams generally want to improve in order to deliver software effectively:

- Deployment frequency: How often organizations successfully release to production

- Lead time for changes: The amount of time it takes for a commit to make it to production

- Change failure rate: The percentage of deployments causing failures in production

- Time to restore service: The time it takes to restore the service to a working state
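
As a rough illustration of how these four metrics reduce to simple arithmetic over deployment records, here is a Python sketch. The `Deployment` record, its field names, and the choice of the median as the summary statistic are assumptions for illustration, not an official DORA formula; time to restore is measured here from when a failure started to when service came back.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed_at: Optional[datetime] = None    # when it caused a production failure, if it did
    restored_at: Optional[datetime] = None  # when service was restored after that failure


def dora_metrics(deployments, period_days):
    """Compute the four metrics over a reporting period (assumes at least one deployment)."""
    failures = [d for d in deployments if d.failed_at is not None]
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    restore_times = [d.restored_at - d.failed_at for d in failures if d.restored_at is not None]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    hour = timedelta(hours=1)
    history = [
        Deployment(start, start + 2 * hour),
        Deployment(start + hour, start + 5 * hour, start + 6 * hour, start + 7 * hour),
    ]
    print(dora_metrics(history, period_days=1))
```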

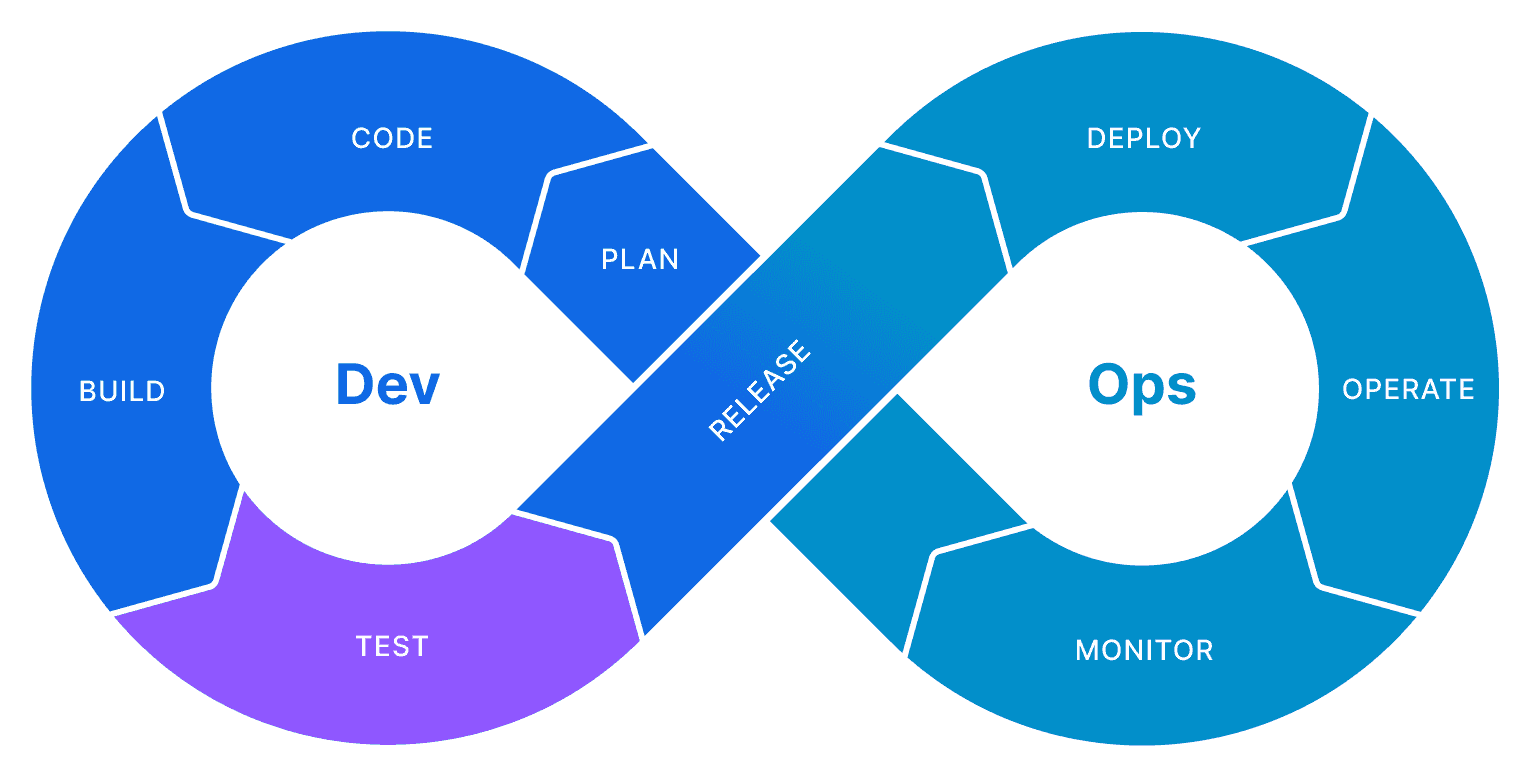

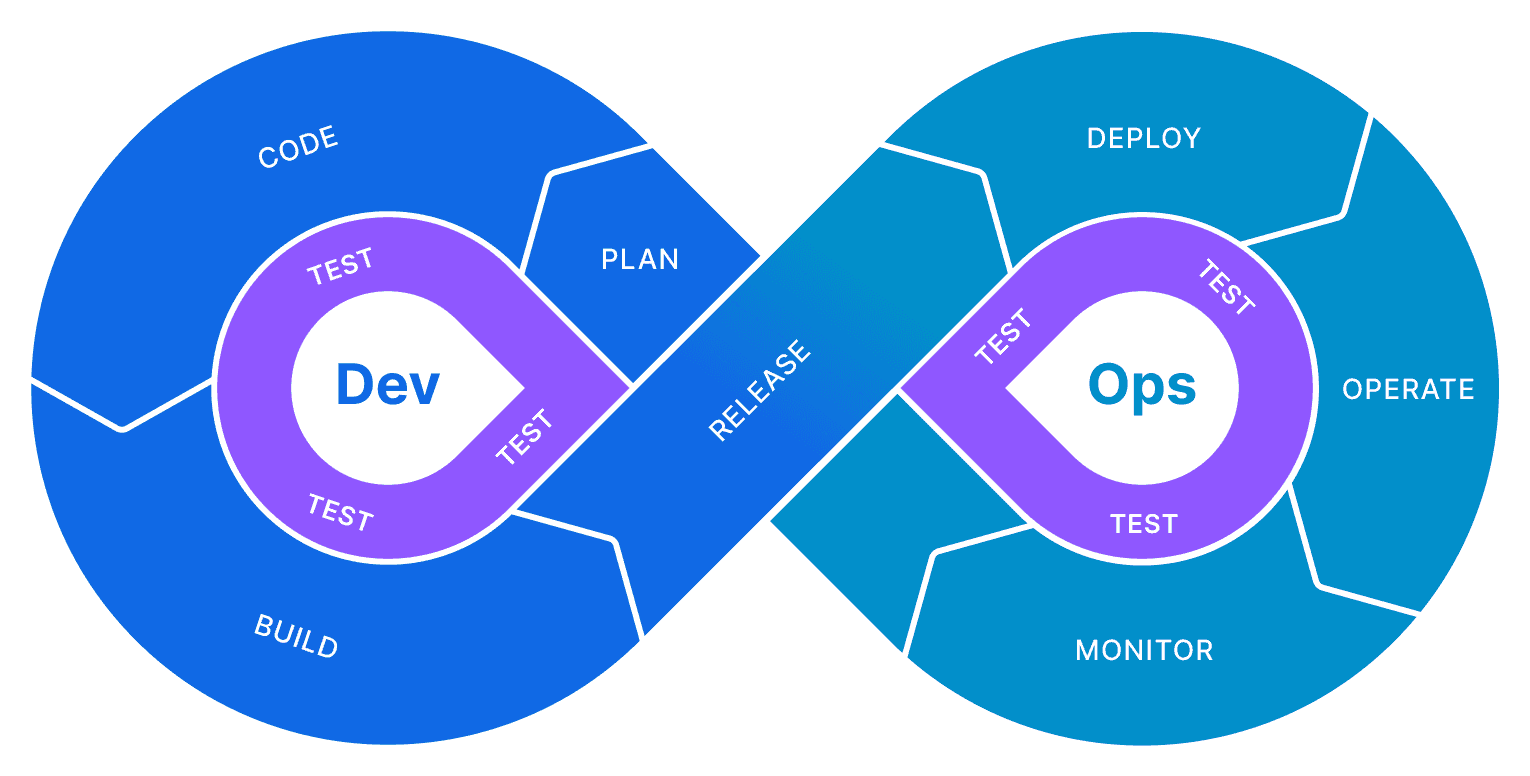

To be able to move these metrics in a positive direction, engineering teams build a flavor of the DevOps cycle visualized below that works for them:

But here’s an uncomfortable truth: depicting testing as a single phase in the DevOps cycle is fundamentally wrong. While most engineering presentations show a neat cycle, like the one above, with testing as one discrete step, the reality is far more complex and interleaved.

Testing isn’t a phase – it’s a continuous activity that permeates every stage of software delivery:

- Code: Run unit tests to verify individual components behave correctly in isolation

- Build: Run many more tests, such as integration tests to ensure components work together seamlessly and static analysis to catch common defects in the code

- Release: Run even more tests, such as comprehensive end-to-end tests that validate the whole system

- Deploy: Run health checks that confirm your system behaves as expected as it rolls out

- Operate & Monitor: Run observability tools that act as continuous tests in production, alerting you to any deviations from expected behavior

Each testing method serves a distinct purpose, and their cost, scope, and duration vary significantly.

Rather than introducing autonomous testing as yet another testing method, think of it as a configurable alternative to traditional testing – one that adapts to and enhances testing throughout the delivery cycle. Traditional tests operate within fixed parameters – running for a set time or through a predetermined sequence of operations. Autonomous testing, on the other hand, is much more flexible. It’s like adjusting a dial:

- Quick sanity check: A couple of minutes of autonomous testing to catch obvious issues

- Thorough validation: Several hours of extensive behavioral testing across diverse scenarios

- Full system verification: Run tests overnight for maximum coverage

The key thing here is that autonomous testing breaks free from the binary “pass/fail these scenarios” model. Instead, it delivers progressively stronger guarantees the longer you let it run. The depth of testing becomes a conscious trade-off you can tune to your needs and to your current phase in the DevOps cycle, rather than something constrained by pre-written test cases.
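
One way to picture the dial is a loop that keeps generating tests until a time budget runs out. The sketch below assumes a hypothetical `check_one(rng)` callback that generates and runs a single test, and it uses the number of distinct input shapes seen (`distinct_behaviors`) as a crude stand-in for coverage. The budget values in `BUDGETS_SECONDS` are illustrative only, and `check_sort` is a toy check where Python’s built-in `sorted` plays the function under test.

```python
import random
import time
from collections import Counter

# Hypothetical budgets for each dial setting.
BUDGETS_SECONDS = {
    "sanity_check": 2 * 60,      # a couple of minutes
    "thorough": 4 * 60 * 60,     # several hours
    "overnight": 10 * 60 * 60,   # an overnight run
}


def explore(check_one, budget_seconds, seed=0):
    """Keep generating and running checks until the time budget is spent.

    `check_one(rng)` generates and runs one test, returning a tuple of
    (behavior_signature, failure_message_or_None).
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_seconds
    seen = set()
    runs = 0
    while time.monotonic() < deadline:
        signature, failure = check_one(rng)
        runs += 1
        seen.add(signature)
        if failure is not None:
            return {"runs": runs, "distinct_behaviors": len(seen), "failure": failure}
    return {"runs": runs, "distinct_behaviors": len(seen), "failure": None}


def check_sort(rng):
    """Example check: validate a sort routine against simple oracle properties."""
    xs = [rng.randrange(10) for _ in range(rng.randrange(8))]
    out = sorted(xs)  # stand-in for the function under test
    ordered = all(a <= b for a, b in zip(out, out[1:]))
    same_items = Counter(out) == Counter(xs)
    # Crude proxy for "which behavior did this input exercise".
    signature = (len(xs), len(set(xs)))
    return signature, (None if ordered and same_items else f"bad output for {xs}")


if __name__ == "__main__":
    # Turn the dial down to fractions of a second just for demonstration.
    for budget in (0.01, 0.5):
        print(budget, explore(check_sort, budget))
```

Because the random seed is fixed, a longer budget replays every test a shorter one ran and then keeps going, so it can only cover more ground. That is a small-scale version of guarantees that grow with runtime.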

Hope-driven development

This flexibility from autonomous testing addresses a fundamental weakness in traditional DevOps practices: our reliance on hope as a strategy. Think about it – we hope our unit tests cover all possible cases, hope our dependencies will remain stable, hope our integration tests account for every way customers might use the product. We even hope that when things go wrong, we’ll have enough telemetry data to reproduce and debug issues. And ultimately, we hope our teams can afford to spend countless hours hunting down issues in production.

Hope is not a strategy. Everything that can go wrong in production will eventually go wrong in production; it is just a matter of time.

Instead of hoping we’ve thought of everything, with autonomous testing, we let computers systematically explore the possibilities, validating against explicit business rules we define. With this approach, we can continuously uncover those expensive unknown unknowns before they manifest as very known production incidents.
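
Here is a small sketch of what validating against explicit business rules can look like, with invariants acting as the oracle. The `transfer` function, the account names, and both rules (no negative balances, money is conserved) are hypothetical examples. The planted bug only shows up when someone transfers money to themselves. That is exactly the kind of case a hand-written test suite tends to skip, but random exploration stumbles into it quickly.

```python
import random


def transfer(balances, src, dst, amount):
    """Hypothetical business operation with a subtle bug in self-transfers."""
    if amount <= 0 or balances[src] < amount:
        return False  # reject invalid or overdrawing transfers
    src_balance, dst_balance = balances[src], balances[dst]
    balances[src] = src_balance - amount
    # Bug: when src == dst, this write overwrites the debit above and creates money.
    balances[dst] = dst_balance + amount
    return True


def check_invariants(balances, expected_total):
    """Explicit business rules acting as the oracle."""
    if any(balance < 0 for balance in balances.values()):
        return "a balance went negative"
    total = sum(balances.values())
    if total != expected_total:
        return f"money not conserved: total is {total}, expected {expected_total}"
    return None


def explore_transfers(seed, steps=10_000, accounts=("alice", "bob", "carol")):
    """Apply random transfers and check every business rule after each one."""
    rng = random.Random(seed)
    balances = {name: 100 for name in accounts}
    expected_total = sum(balances.values())
    for step in range(steps):
        src, dst = rng.choice(accounts), rng.choice(accounts)
        amount = rng.randrange(1, 60)
        transfer(balances, src, dst, amount)
        violation = check_invariants(balances, expected_total)
        if violation:
            return step, (src, dst, amount), violation
    return None


if __name__ == "__main__":
    print(explore_transfers(seed=1))
```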

Now what?

In software, sometimes (many times) words can lose their meaning, becoming hollow shells of promise without substance. But autonomous testing stands apart. If you’ve made it this far, you understand that this isn’t just another tool in our development arsenal – it’s a paradigm shift in how we approach software delivery.

If you’re interested in learning more about autonomous testing, contact us! We’re all big yappers here.