Crushing Castlevania with Antithesis

What’s the hardest part of the original NES Castlevania?

Death? Antithesis one-shots him.

Dracula? He was easy once we had a ROM-map to tell which phase he was in (byte $007A, by the way).

That stupid corridor with all the gorgon heads? Didn’t even register.

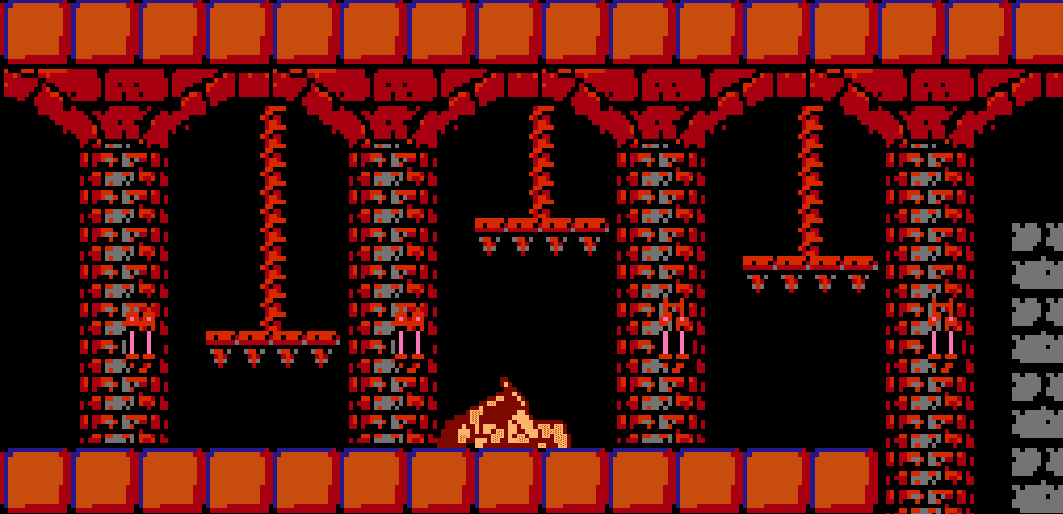

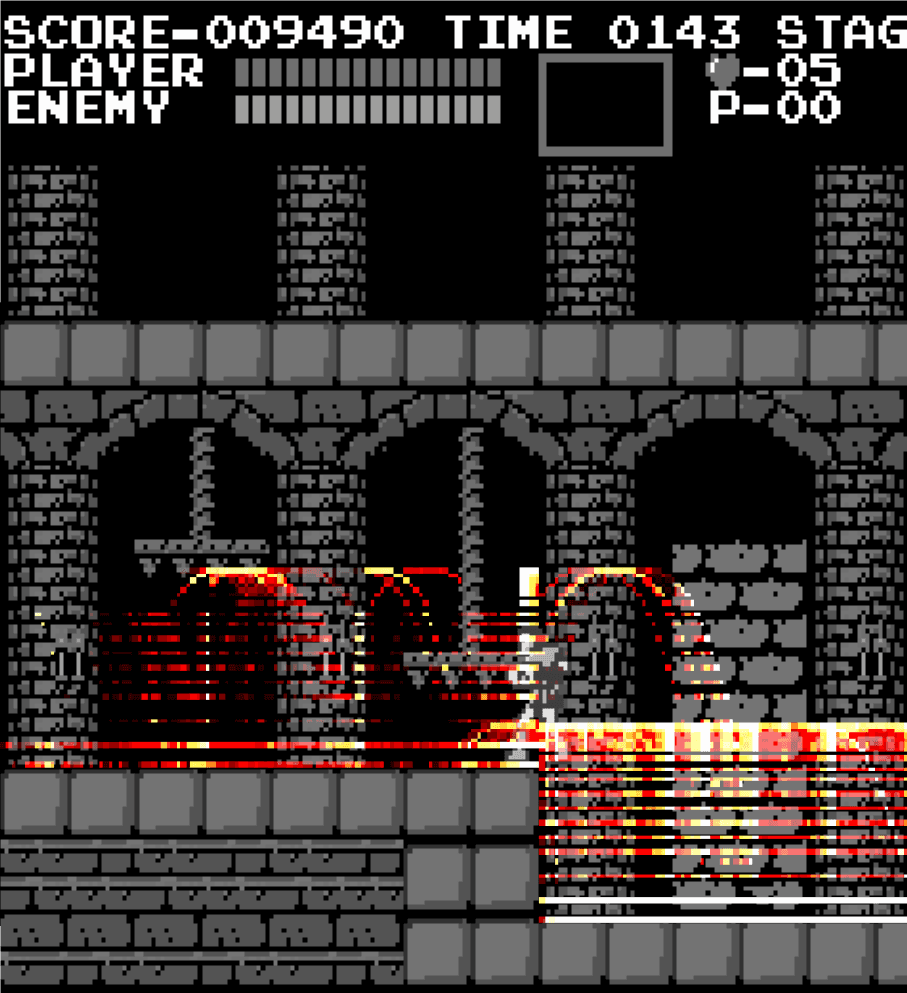

No, for us the hardest part is Stage 6’s stompers1. Three alternating crushers a 5-year old could intuitively understand and probably get through.

We spent an unbelievable amount of exploration time trying to find a way past the trio of stompers, but the same configuration that had blitzed through the first five stages was utterly stuck here. What could be going wrong? Read on to learn the answer, and for broader lessons on how to get good test coverage for any software system, even ones that aren’t about battling the undead.

This, by the way, is the third installment in a series of posts showing how Antithesis explores classic 8-bit NES games, each of which holds an extraordinarily complex state space. When Antithesis isn’t playing NES games, it’s helping developers track down hard-to-find bugs in distributed systems. (But if you just want to see it playing NES games, skip to near the end for a video of our full victorious playthrough of Castlevania.)

Snouty’s quest

A quick refresher on Castlevania:

Castlevania2 is a 1986 platformer for the Nintendo Entertainment System (NES), in which the player guides Simon Belmont, a whip-cracking vampire hunter who takes his fashion cues from Conan the Barbarian, through Dracula’s castle.3 The game is less open than Zelda, but more complex than Mario,4 and rivals those masterworks in influence.

And a quick refresher on Antithesis:

The heart of Antithesis is a deterministic hypervisor, which lets any program be run in a controllable, rewindable way so all bugs are reproducible. There’s also a guidance component that intelligently seeks out and explores system states that seem likely to lead to interesting behavior.

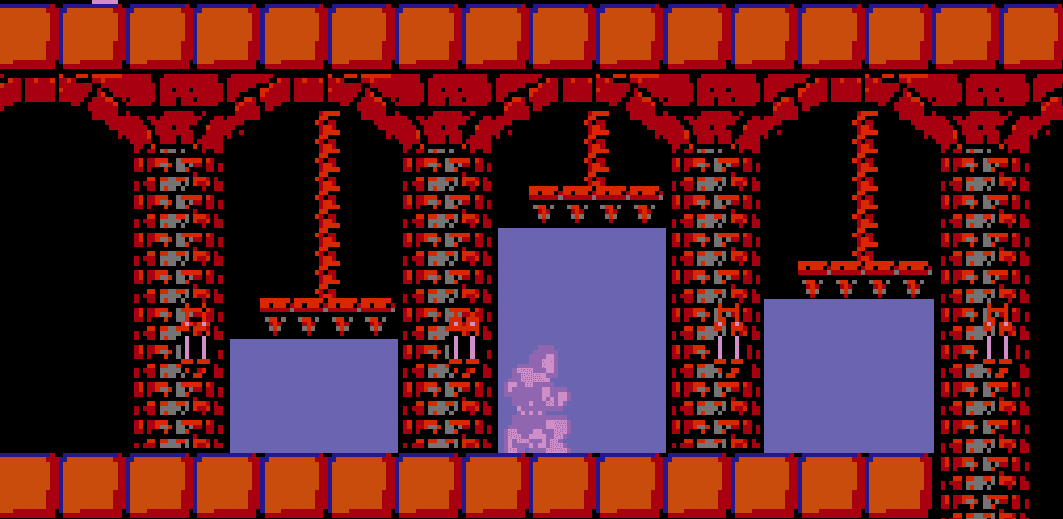

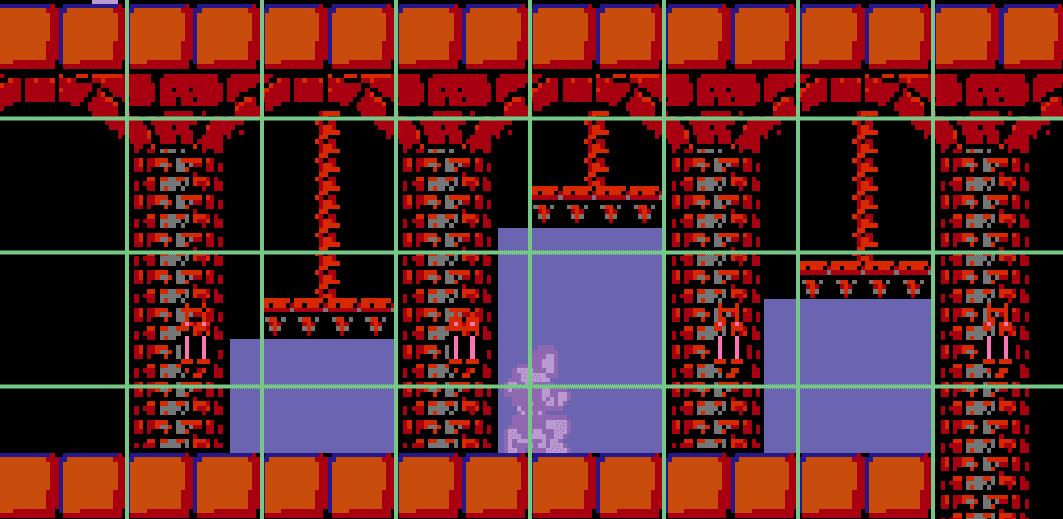

How does this work for Castlevania? In this case, we’re searching that vast space for an exceedingly rare yet possible state of the system: “You win!”. Just like for Zelda, we can make use of SOMETIMES_EACH assertions to explore Castlevania.5 Here, we’re interested in traversing all the stages of the game, so our guiding assertion will be SOMETIMES_EACH({SimonX/32, SimonY/32, stageID}). In effect, we’re bucketing the game’s map into a 32-pixel square grid and turning the platform loose to discover it all.

But as we explore the game, we will likely find a tremendous number of system states that all get lumped together into a single bucket, so many that it’s impractical to save them all. This is analogous to how a traditional, coverage-guided fuzzer may find many input sequences that lead to a particular line of code running, but can’t save all of them in its corpus. When Antithesis encounters the same assertion many times, it will score the various states that hit that assertion and then only use a few of them as a basis for future exploration.6

Our SOMETIMES_EACH assertion guides Antithesis by providing a system state to start from, choosing an exemplar from an underexplored bucket to ensure that our exploration effort will be focused on the horizon of progress. Once we have a state Antithesis thinks might be interesting, it adds a few random bytes to the input string that got it there – effectively, it mashes buttons on the NES controller. It feeds all new states encountered through our assertions, then returns to SOMETIMES_EACH for the next cycle of this process.

This strategy drives Antithesis to fully explore the Cartesian space of Castlevania, and in doing so the system cruises past enemies and boss fights with ease.

And then we get to the stompers.

This got our attention: we don’t want to get stuck like this. But finding our way past was a great illustration of how Antithesis can work through barriers in real software.

Curse of squashedness

The first step to solving a problem is admitting that you have one. It’s not uncommon for fuzzers and other autonomous testing systems to blast through most of a program, but then suddenly run into an insurmountable barrier (checksums and hash functions are notorious for this). But this problem can totally happen in conventional testing too! Have you ever made a change to your program that kept all your tests passing, but the reason they kept passing is that they were no longer doing anything?

The traditional solution to this problem is to keep an eye on your branch coverage, and to assume that something has gone wrong if it suddenly takes a dive. Antithesis offers a more powerful alternative to branch coverage, called “sometimes” assertions, that allow you to spell out specific situations that you want to be sure your tests are reaching. But in the case of Castlevania, both of those approaches were overkill, because we could just draw heatmaps of where in the game the system was getting to, and zoom in on the giant pile of corpses.

Our growing stack of Belmont patties was a classic example of how even a sophisticated search algorithm can nonetheless get incorrigibly stuck. The system was trying to find new buckets to explore, but to reach new ones, it had to pass through a bottleneck of a few grid cells under the stompers. And if we didn’t get very lucky, the exemplar inputs in that area of the map would be ones where Simon faced certain doom.

If Simon is standing under a descending stomper (or even an insufficiently ascending one), there might be literally no string of inputs that takes him to safety. Yet his health is fine, so Antithesis considers this a perfectly acceptable state from which to start all exploration from this bucket. We would almost never make our way through the gauntlet of stompers without establishing a doomed exemplar, and because I was using a naive optimizer,7 we could never escape our doom.

Sooner or later, Antithesis would get stuck starting every exploration of the stomper area from a doomed state. Our guiding SOMETIMES_EACH assertion couldn’t progress in the game because no matter what it did it couldn’t find any new buckets to explore. Essentially, the system ran out of ideas.

But we sure weren’t out of ideas…

Dracula, X, Y, stage #</

When an autonomous testing system is stuck on some barrier in a program you’re trying to test, there are two main approaches to overcoming the problem:

- Improve your input distribution, the process by which you generate the inputs to feed into the system

- Become more sensitive about output interpretation, finding new sources of signal to help you hill-climb to a solution.

Is there any way to make our input distribution more random? Mashing buttons at random sure seems pretty random! …Well, kind of. The NES controller is just one means of several to affect what happens in our game. If we added new sorts of inputs beyond pressing combinations of the eight buttons on the controller, such as swapping game cartridges, we would add new dimensions to the randomness of our inputs.8 But most of these exotic inputs would be actively harmful, and adding them to the system’s repertoire would lead to it wasting time instead of playing to win the game. In a real system, though, sometimes adding entirely new types of inputs is exactly what’s needed to produce a certain interesting behavior.

Conversely, making your inputs less random can also help. Reducing the randomness of your inputs can actually produce more randomness in the outputs!9 For instance, Simon could choose from button presses assembled into sensible actions like “crouch” or “jump right”, instead of also from among incoherent inputs like smashing all four directional controls at once. At the extreme, this devolves into finding a sequence of inputs that plays through the game from start to victory, and having that be the only input sequence the system would ever try (basically turning your autonomous test back into a boring scripted end-to-end test with all the brittleness that implies). But there’s a useful middle ground that gets exploited by things like grammar-based fuzzers and our own fault injector.

Perhaps we could have gotten past the stompers with a more clever input distribution, but it seemed in this case that refining output interpretation was the better approach.

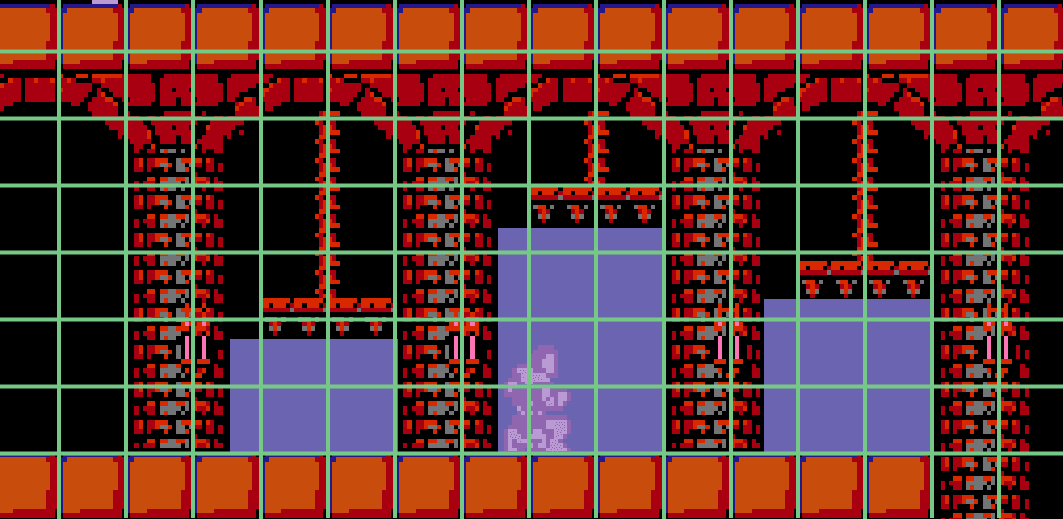

First, we revisited the resolution of the grid created by our SOMETIMES_EACH cells. What we had was a holdover from our work on Mario, where we used 32-pixel cells. But with 32-pixel cells, there are no cells wholly within the safe zones between the stompers. Moreover, the danger zone of those cells is the rightmost edge, where Simon first enters them and which therefore would be chosen as exemplars (because we were naively optimizing on time remaining in the level).

By substituting svelte size-16 Simon-scale cells, we could guarantee a cell with a non-doomed exemplar after each stomper. As a result, we didn’t have to pass through the full trio of stompers in one monumentally unlikely input sequence; we could split up the work. This is exactly analogous to how a conventional coverage-guided fuzzer incrementally makes its way through successive stages of a parser.

But we were still spending a large amount of effort exploring from doomed states, and adjusting the grid’s resolution wasn’t going to fix this.10

We needed our SOMETIMES_EACH to focus on the parts of Castlevania’s memory relevant to the task at hand. If we had been trying to find bugs in Castlevania’s sound system, we would choose to pay attention to bytes like $00D8 (Square Wave 2 SFX Halt). For beating the game, we had instead chosen to care about bytes like $003F (Simon Y-Coordinate) and $0028 (Stage Number), but the system’s blindness to the stompers was crushing our hopes. So we added another parameter: stomper position.11

This meant that standing in the same position under a stomper when it’s too late to escape was no longer considered to be in the same bucket as standing under it in a window of safety, so we would reliably have non-doomed buckets to explore from even under the stompers. For programs you’re testing on Antithesis, which probably aren’t on the NES, you can use logging or assertions to distinguish states.

At long last, we got past the blockage. From here, our standard configuration was easily up to the task of fighting its way through the rest of the game. We defeated Dracula, and saved the day!12

Legacy of debugging

Suppose that your day job is not about delivering the world from the scourge of Dracula. What does this story tell you about using Antithesis to slay bugs that aren’t susceptible to wooden stakes and garlic? (Although a judicious splash of holy water will replace most computer problems with a different problem.)

It’s not uncommon to run into barriers like this when testing real code. As mentioned, checksums and hash functions are the traditional bane of fuzzer authors, but much like a vampire that transforms into a bat, fuzzing barriers can take much subtler and more innocuous forms. But as we saw here with the stompers, Antithesis has the tools to work its way past parts of a program that require a substantially different approach.

First, know that you’re getting stuck, either with traditional branch coverage, logging, or “sometimes” assertions. If you use our SDK, Antithesis will use this information to guide its exploration and can vanquish many obstacles itself. You can even deliberately introduce synthetic bugs to make sure your tests are strong enough to find your bugs.

Second, ensure that you’re exercising your system in a way that makes it sometimes do the kinds of things that get you to the areas of interest in your program. It might be that the bug’s preconditions are statistically impossible to meet for some sneaky reason. You may need to expand the input distribution by adding new types of inputs to the workload, or to reduce hardcoding in the workload so Antithesis can try combinations of inputs you would never think to try. Other times, you know perfectly well what elaborate sequences of inputs are needed to proceed, so make scripted workload steps that provide exactly those inputs.

Lastly, you can add logging or assertions that help us to distinguish important states. We have an SDK for that. Oh, and if you haven’t enabled coverage instrumentation for your program, you probably should. Those fuzzer people actually got this one right.

Our goal is to explore everything that your system can do, and to that end everything else is just an input. If we can steer our way through a challenge as notoriously difficult as old-school NES games, we can also find our way to the gnarliest bugs in customer software. Having fun playing games yields serious insights for all our users.