In the labyrinth of unknown unknowns

A Quality Assurance (QA) engineer walks into a bar. Orders a beer. Orders 0 beers. Orders 99999999999 beers. Orders an anteater. Orders -1 beers. Orders an anteater in a beer. Orders a ueicbksjdhd. The first real customer walks in and asks for a light. The bar bursts into flames, killing everyone.

This old joke illustrates a fundamental challenge in testing software: testing is limited by the developer’s knowledge and imagination. How do you test for the unknown unknowns, scenarios you can’t even imagine? Even the best developers can’t think of everything. Would 8 test cases have saved everyone at the bar? How about 100?

As the fire in the bar demonstrates, catastrophic failures in software are rarely caused by expected interactions. We’ve all seen examples in the wild, like the Heartbleed bug in the OpenSSL library, so we know they’re out there – but how do we uncover them?

Blind spots lurking in your software

Donald Rumsfeld borrowed the term “unknown unknowns” from the Johari Window, a tool developed by psychologists in 1955. Slightly adapted, the framing is still a useful tool for thinking about software testing.

| | Known | Unknown |
|---|---|---|
| Known | Usage scenarios we are aware of and understand because we tested them. | Usage scenarios we are aware of but haven't tested yet, so we don't fully understand their behavior. |
| Unknown | Usage scenarios we assume we understand but haven't explicitly tested them. | Usage scenarios we are neither aware of nor understand, therefore never tested them. |

It’s this last category that our unfortunate QA engineer in the bar failed to acknowledge. You don’t know what you don’t know and therefore don’t test it. Instead, a user finds it for you, and catches fire.

What could our QA engineer have done differently?

A hero’s quest for unknown unknowns

In many ways, searching for unknown unknowns in software is like playing an open-world video game. Let’s revive our QA engineer and give them one more chance. This time to test a video game. A video game set in a vast, unexplored city. There may be an ancient artifact hidden in this sprawling network of streets and structures. Or there may not be. There may be more than one. Our hero doesn’t know. How should they approach this challenge? Do they wander aimlessly, hoping to stumble upon their artifact? Or can they do so strategically, as a seasoned adventurer would?

Choosing a search strategy

In game design terms, our strategy needs to solve several classic challenges:

- The Path Explosion Problem: This is a city with countless intersecting streets. How do we ensure we’ve explored every possible route?

- The Diversifying Guidance Problem: How do we avoid getting stuck in one neighborhood, repeatedly checking the same houses?
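To get a feel for the path explosion problem, here is a rough sketch that counts routes across a city laid out as a square grid. The grid layout and the rule that our adventurer only ever moves east or south are simplifying assumptions made for illustration; a real city (or real software) allows far more routes than this.

```python
from math import comb


def count_routes(blocks: int) -> int:
    """Count east/south-only routes across a blocks-by-blocks street grid.

    Hypothetical model: intersections form a (blocks + 1) x (blocks + 1) lattice.
    """
    # routes[col] holds the number of ways to reach column `col` in the current row.
    routes = [1] * (blocks + 1)
    for _ in range(blocks):
        for col in range(1, blocks + 1):
            # Arrive from the north (old routes[col]) or from the west (routes[col - 1]).
            routes[col] += routes[col - 1]
    return routes[blocks]


if __name__ == "__main__":
    for blocks in (2, 5, 10, 20):
        # The step-by-step count agrees with the closed form C(2n, n).
        assert count_routes(blocks) == comb(2 * blocks, blocks)
        print(blocks, count_routes(blocks))
```

Even with movement this restricted, a 10-by-10 grid already has 184,756 distinct routes, which is why walking every route one by one does not scale.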

One way to approach this is to do what our QA engineer did at the bar and use an example-based testing approach. This is analogous to making a list of all the well-known landmarks and popular spots and visiting each one, hoping to find an artifact. As we already observed, this approach is limited by the tester’s imagination, and we need to overcome that constraint.

One way to do this is to develop a list of characteristics that might indicate the presence of an artifact, rather than a list of places. For instance, “older than 500 years,” “has gargoyles,” or “near a water source.” Our QA engineer can then program our avatar to explore on its own, paying special attention to locations with these characteristics. This is analogous to using property-based testing (PBT) to generate and execute tests without human intervention. PBT will cover a broader range of possibilities faster than manual, example-based testing, but the city is still vast, and we know there may not actually be any artifacts to find. At the same time, we know we can at least try to explore every part of the city – we can try to explore well. How do we do this?
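The difference between the two styles can be sketched back at the bar. Everything below is hypothetical: `order_total`, the price, and the order limit are invented for illustration, and the input generator is hand-rolled with Python’s `random` module rather than taken from a PBT library (in practice a library such as Hypothesis would handle generation for you).

```python
import random

UNIT_PRICE_CENTS = 500  # hypothetical price of one beer
MAX_ORDER = 1_000       # hypothetical upper limit per order


def order_total(quantity: int) -> int:
    """Hypothetical system under test: price an order of beers, in cents."""
    if quantity < 0 or quantity > MAX_ORDER:
        raise ValueError("quantity out of range")
    return quantity * UNIT_PRICE_CENTS


def example_based_tests() -> None:
    # A fixed list of cases: limited to what the tester thought of.
    assert order_total(1) == 500
    assert order_total(0) == 0


def check_order(quantity: int) -> None:
    # Properties state what must hold for ANY input, not one specific case.
    try:
        total = order_total(quantity)
    except ValueError:
        assert not 0 <= quantity <= MAX_ORDER, "valid order was rejected"
        return
    assert 0 <= quantity <= MAX_ORDER, "invalid order was accepted"
    assert total >= 0
    # Splitting the order into two rounds must not change the bill.
    first_round = quantity // 2
    assert order_total(first_round) + order_total(quantity - first_round) == total


def property_based_tests(runs: int = 1_000, seed: int = 0) -> int:
    rng = random.Random(seed)
    for _ in range(runs):
        # Mix values near the boundaries with wildly large and negative ones.
        quantity = rng.choice([
            rng.randint(-5, 5),
            rng.randint(MAX_ORDER - 5, MAX_ORDER + 5),
            rng.randint(-10**12, 10**12),
        ])
        check_order(quantity)
    return runs


if __name__ == "__main__":
    example_based_tests()
    print("property checks run:", property_based_tests())
```

The example-based test knows about two orders. The property-based version never names a specific order at all; it only says what a correct bar must do for every order it receives.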

Testing with random choices: the novice adventurer’s approach

One naive way to solve this problem is to… well, act like a complete novice. Imagine our QA engineer mashing buttons on the controller, forcing the avatar to stumble around the city, taking random turns at every intersection, and walking into any random building it could find.

We might eventually find an artifact, or several, but it’s incredibly inefficient. In testing terms, using completely uncorrelated random choices means making an independent decision at every point, without considering the past or the context of our search. Given a fixed time budget (or a QA engineer who eventually gets tired), very few of the generated choices will be valid. We’ll check only a tiny fraction of the city before giving up.
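A small sketch makes the waste visible. As a stand-in for “valid input”, assume the software under test only accepts strings of balanced parentheses; that rule, and the function names below, are assumptions chosen purely for illustration.

```python
import random


def is_balanced(text: str) -> bool:
    """Stand-in validity check: are the parentheses balanced?"""
    depth = 0
    for char in text:
        depth += 1 if char == "(" else -1
        if depth < 0:
            return False  # closed something that was never opened
    return depth == 0


def random_input(rng: random.Random, length: int) -> str:
    # Every character is chosen independently, ignoring what came before.
    return "".join(rng.choice("()") for _ in range(length))


def valid_fraction(samples: int = 10_000, length: int = 10, seed: int = 0) -> float:
    """Fraction of purely random inputs that pass the validity check."""
    rng = random.Random(seed)
    valid = sum(is_balanced(random_input(rng, length)) for _ in range(samples))
    return valid / samples


if __name__ == "__main__":
    print(f"valid inputs: {valid_fraction():.1%}")
```

For strings of length 10, only 42 of the 1,024 possible strings are balanced, roughly 4%. The rest of the time budget is spent on inputs that are turned away at the door, and the share of valid inputs shrinks further as inputs get longer.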

You might think, “That sucks!” And you’d be right. With this approach, many rare bugs will be hidden well enough that your tests won’t find them, yet poorly enough that your users will stumble upon them. Not a great situation for our QA engineer’s reputation!

Testing with hard-coded knowledge: the guided tour approach

Maybe we know that any artifacts in the city will be hard to reach. Maybe they’re at the end of a maddening maze, maybe they’re guarded by a monster, or maybe they’re in a misty, waist-deep river filled with who knows what. To increase the probability of generating valid search choices (and finding an artifact), the choice at every point should not be made randomly, but according to a guide. This is like being given a partial map of the city, some written clues about artifact locations, and a big gun before the search begins. All this lets us reach more places than previously possible.

In testing terms, we’re moving from pure randomness to guided exploration. This approach is the first step towards generating a diverse set of valid test cases by using information about the software under test (SUT) to direct the generation.
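As a minimal sketch of guided generation, assume again that the software under test only accepts strings of balanced parentheses (an illustrative stand-in, not a real SUT). Instead of picking each character blindly, the hypothetical generator below consults a guide at every step and only offers choices that can still lead to a valid input.

```python
import random


def is_balanced(text: str) -> bool:
    """Stand-in validity check: are the parentheses balanced?"""
    depth = 0
    for char in text:
        depth += 1 if char == "(" else -1
        if depth < 0:
            return False
    return depth == 0


def guided_input(rng: random.Random, length: int) -> str:
    """Build a balanced string by only offering choices that can still succeed."""
    if length % 2 != 0:
        raise ValueError("length must be even")
    chars = []
    depth = 0  # number of "(" that are still waiting to be closed
    for position in range(length):
        slots_after = length - position - 1
        options = []
        if depth + 1 <= slots_after:
            options.append("(")  # there is still room to close it later
        if depth > 0:
            options.append(")")  # there is something open to close
        choice = rng.choice(options)
        depth += 1 if choice == "(" else -1
        chars.append(choice)
    return "".join(chars)


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [guided_input(rng, 10) for _ in range(5)]
    print(samples, all(is_balanced(s) for s in samples))
```

Every generated input is valid, and the choices within the allowed set are still random, so the outputs stay varied. Note that the knowledge of what counts as valid lives inside the generator itself.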

But with this approach, your testing is brittle: it depends on hard-coded knowledge that runs the risk of quickly becoming outdated as your software changes. If you’re not careful, you could update your software and never notice that your tests had stopped working. You’d be left with outdated clues that might lead your avatar to search near water sources indefinitely, even if the artifact had moved to a spot near a forest. We can do better.

Testing with rewards: the adaptive adventurer

To do better, we can borrow ideas from reinforcement learning. Imagine that our QA engineer not only follows the clues given at the beginning of the search but also learns from each exploration, updating their strategy in a constant, automated feedback loop.

This dynamic approach lets us not only guide our tests toward a variety of valid choices, but also let each new choice build on what every previous choice revealed, just as our adaptive adventurer explores the city more intelligently with each clue collected.
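One of the simplest reward-driven techniques is an epsilon-greedy bandit, sketched below. It is shown only to illustrate the feedback loop, not as a description of how any particular tool works. The three search strategies and their payoff rates are invented, and the “test run” is simulated; in a real setup the reward would come from a measurement such as newly covered code.

```python
import random

# Hypothetical chance that one test run from each strategy reveals new behavior.
# The search does not get to see these numbers; it has to learn them.
HIDDEN_PAYOFF = {"near_water": 0.05, "old_town": 0.10, "maze": 0.60}


def run_test(rng: random.Random, strategy: str) -> float:
    """Simulated test run: reward 1.0 if it uncovered something new, else 0.0."""
    return 1.0 if rng.random() < HIDDEN_PAYOFF[strategy] else 0.0


def adaptive_search(steps: int = 2_000, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Epsilon-greedy loop: mostly follow the best-looking strategy, sometimes explore."""
    rng = random.Random(seed)
    strategies = list(HIDDEN_PAYOFF)
    pulls = {name: 0 for name in strategies}
    estimate = {name: 0.0 for name in strategies}
    for _ in range(steps):
        if rng.random() < epsilon:
            chosen = rng.choice(strategies)             # explore: try anything
        else:
            chosen = max(strategies, key=estimate.get)  # exploit: use what we learned
        reward = run_test(rng, chosen)
        pulls[chosen] += 1
        # Incremental update of the average reward seen for this strategy.
        estimate[chosen] += (reward - estimate[chosen]) / pulls[chosen]
    return pulls


if __name__ == "__main__":
    print(adaptive_search())
```

Nothing in the loop says where artifacts are likely to be. The preference for the most rewarding strategy emerges from the feedback, so if the payoffs change, the search can shift with them instead of following stale clues.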

Running our search strategy: solo quest or party adventure?

Now we have an intelligent adventurer who learns while exploring. But can we do better? Yes. There is one last piece we haven’t thought about yet. Are we going on this quest alone? Running our tests sequentially is like sending a single adventurer to explore the entire city. It’s straightforward, but might take a while. Why send one adventurer when you can dispatch an entire party? By parallelizing our tests, we can take advantage of cheap, scalable compute resources. It’s like having multiple characters explore different parts of the city simultaneously, sharing information as they go!
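Here is a minimal sketch of the party approach using Python’s standard `concurrent.futures` module. The city grid, the random-walk adventurer, and all names are hypothetical. For simplicity, this party only pools its findings at the end rather than sharing them along the way, and it uses threads; for CPU-heavy tests, separate processes or machines would be the more realistic choice.

```python
import random
from concurrent.futures import ThreadPoolExecutor

CITY_SIZE = 20  # hypothetical city: a 20 x 20 grid of blocks


def explore(seed: int, steps: int = 300) -> set:
    """One adventurer: a random walk from a seed-dependent start.

    Returns the set of blocks visited.
    """
    rng = random.Random(seed)
    x, y = rng.randrange(CITY_SIZE), rng.randrange(CITY_SIZE)
    visited = {(x, y)}
    for _ in range(steps):
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        # Clamp the position so the adventurer stays inside the city limits.
        x = min(max(x + dx, 0), CITY_SIZE - 1)
        y = min(max(y + dy, 0), CITY_SIZE - 1)
        visited.add((x, y))
    return visited


def explore_as_party(party_size: int = 8) -> set:
    """Send several adventurers out at once and merge what they saw."""
    with ThreadPoolExecutor(max_workers=party_size) as pool:
        reports = list(pool.map(explore, range(party_size)))
    # Pool the findings of the whole party into one map.
    return set().union(*reports)


if __name__ == "__main__":
    solo = explore(seed=0)
    party = explore_as_party()
    print(f"solo: {len(solo)} blocks, party: {len(party)} blocks")
```

Each adventurer gets its own seed, so the party spreads out instead of retracing one walk, and the merged map always covers at least as much as any single member’s.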

QA engineer reenters the bar

The most dangerous bugs are the ones you don’t even know to look for. Dealing with these bugs requires our QA engineer to rely on strategies that explore the vast universe of possibilities intelligently and quickly, reaching beyond what a human mind can conceive.

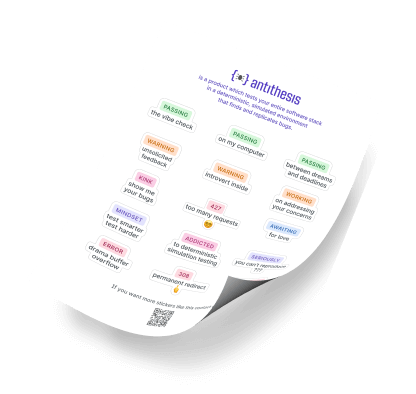

That’s why we built a platform that autonomously searches software for bugs, especially unknown unknowns. It tests, in parallel, usage scenarios that developers are highly unlikely to have considered, finding issues before users do.

If you too are not interested in your software being the digital equivalent of a bar bursting into flames, killing everyone – contact us to try out our platform!