Rolling for our new initiative: Test Composer

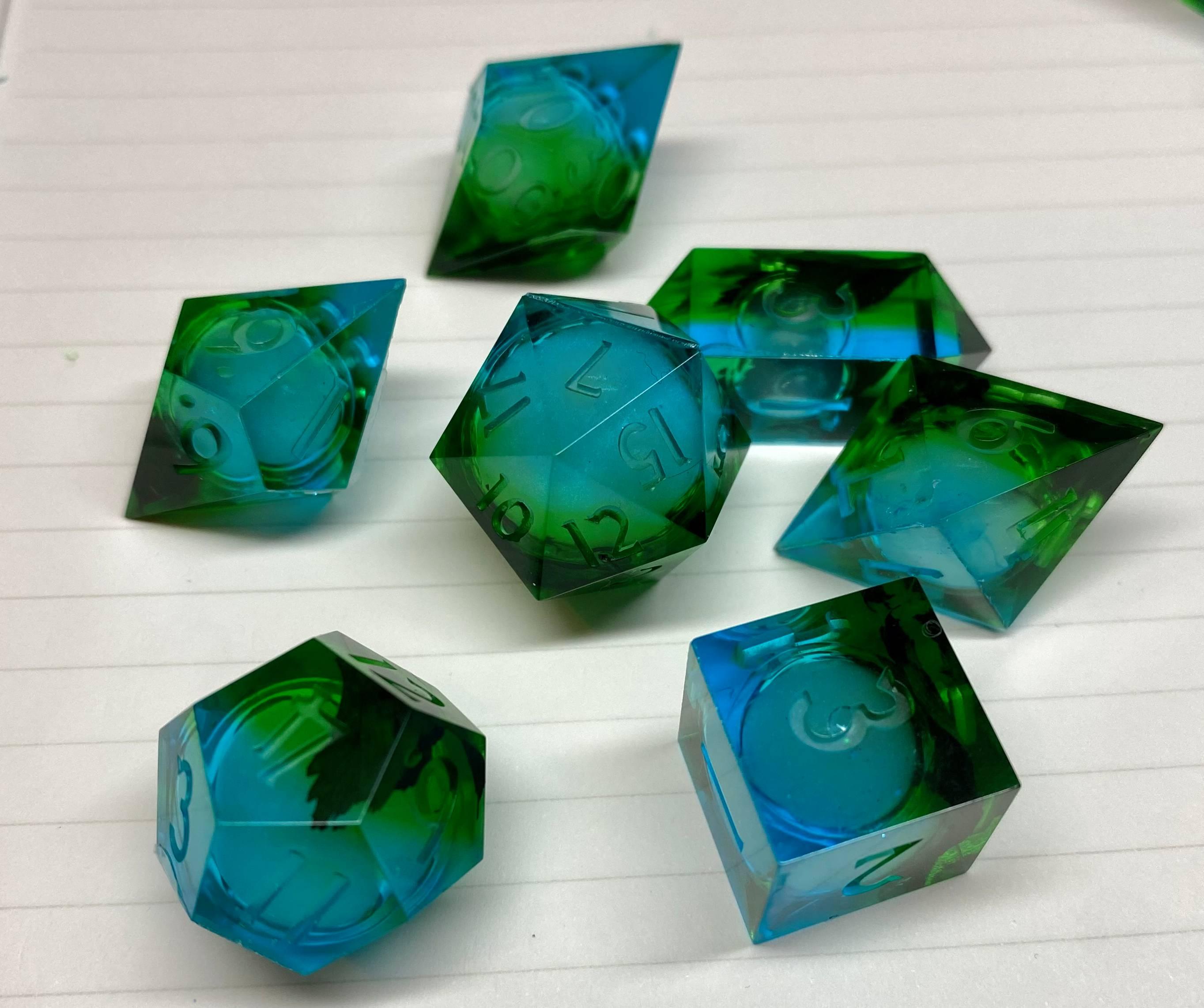

Having good tests can save time and energy in a variety of cases, not only in software development. A while ago, I got really into dice making, and started making sets for my friends. I got one request for special ‘liquid core’ dice. To make them, you inject a mix of liquids and sparkly mica powder into a tiny glass sphere, seal the sphere, then add it to a die mold with epoxy resin. The first time, I waited for a few days – in vain – for the dice to fully harden. I repeated the process again. And again. But still no luck. What was happening?

Maybe I wasn’t mixing a perfect 1:1 ratio of epoxy resin to hardener. Or perhaps the liquid from the orbs was leaking? Eventually, I tried making a set with a completely different resin, and lo and behold, the dice hardened perfectly. Had I tested the resin by itself, tried a different resin sooner, or better – regularly tested the resins so I’d know if they’d gone bad – I wouldn’t have been left with 3 sets of upsettingly squishy dice.

I found it hard to think of and implement every useful test case when making dice – just as it is when testing software. Antithesis tries to make this easier with powerful autonomous software testing. However, one area where we still need active work from users is the test template: the code that exercises the software by generating sequences of unique tests and validating the results on the Antithesis Platform. Writing good test templates is actually one of the most important (and currently most neglected) things users can do to ensure success (read: find interesting, hard-to-find bugs).

There are some pitfalls we see people encounter as they come up to speed on Antithesis, so I’ve been working on a way to make writing test templates easier. I’ll talk about that later in this post, but first, let’s go through some of our best practices and the reasoning behind them, to demonstrate how we think about tests.

Building a test template from scratch

Let’s take a hypothetical engineer. They want to test a pretty simple API with just two functions, a() and b(). Perhaps our engineer has skimmed the documentation, and has a vague grasp of our recommendations. They know that the more randomness they incorporate into their test, the more our exploration can branch. That’s because wherever their test uses randomness to choose what to do, the Antithesis platform is able to explore branches of the multiverse where a different random value was provided, and thus a different behavior is exhibited.1 So maybe now our engineer’s test looks like this:

    import random

    def choose_function():
        return random.choice([a, b])

    def test():
        for i in range(100):
            func = choose_function()
            func()

Now their test is set to generate random length-100 combinations of our API calls – they’re all set, right?

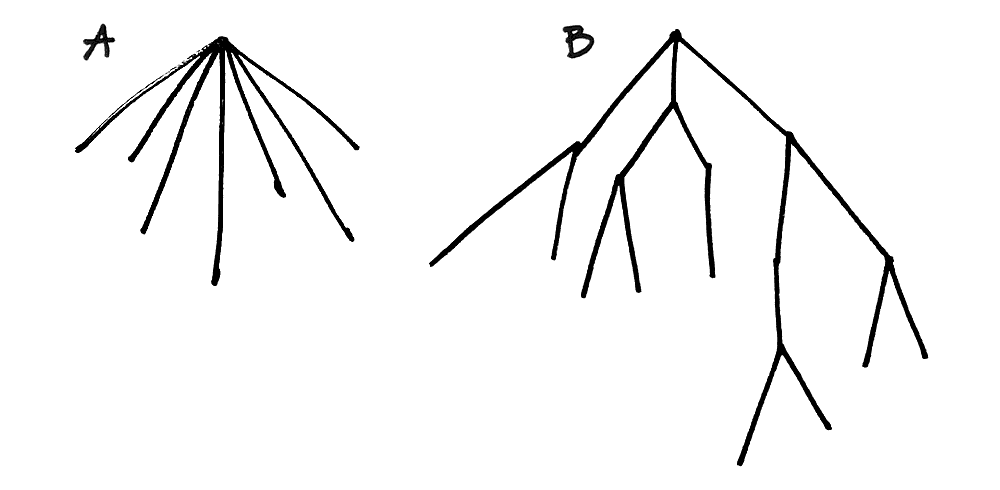

Not exactly. Here, our engineer is using a built-in PRNG abstraction (Python’s random module) initialized at runtime, not drawing from the Antithesis-provided entropy source. This means that due to the deterministic nature of the hypervisor all this software runs in, on every branch Antithesis explores, the ‘random’ numbers generated would always be the same. We’d have to restart this program from scratch in order to get a new seed, and therefore a new random sequence to test. The resulting testing tree would end up looking a bit like example (A), always starting to test from the root. The benefits I mentioned in the last paragraph only take effect when using Antithesis’s own entropy source.
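To make that concrete, here is a small sketch that imitates the effect with nothing but the standard library. It assumes that saving and restoring the PRNG state is a fair stand-in for the platform branching from a snapshot; the placeholder `a()` and `b()` bodies and the `run_branch` helper are made up for illustration.

```python
import random

def a():
    return "a"

def b():
    return "b"

def run_branch(rng, steps):
    """Pick `steps` API calls with the given PRNG and return their names."""
    return [rng.choice([a, b]).__name__ for _ in range(steps)]

# Seed once at "startup", then save the PRNG state. This stands in for
# the moment a deterministic platform would branch from.
rng = random.Random(1234)
snapshot = rng.getstate()

first_branch = run_branch(rng, 10)

# "Branch" again from the same snapshot. The PRNG state is part of the
# snapshot, so the very same choices are replayed.
rng.setstate(snapshot)
second_branch = run_branch(rng, 10)

print(first_branch == second_branch)  # True
```

Both "branches" make identical choices, which is why a PRNG seeded inside the test gives the explorer nothing new to look at.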

What if our engineer replaced the contents of their choose_function() with a call to the Antithesis SDK?2

    def choose_function():
        return antithesis.random.choose([a, b])

Now Antithesis is actually able to feed the random values it wants to, influencing our engineer’s test, and branching out into different histories where it wants. This enables greater exploration at a lower cost, as seen in example (B), since the Antithesis platform doesn’t have to go back and redo a bunch of work each time it wants to try a new branch.

In general, it’s helpful to remember that our engineer doesn’t need to run through every test in Antithesis. Instead, they only need to make it possible to run any test. That’s because as long as there’s a chance of running any one of their tests, our system will be able to “change history” to explore that possibility if it finds that interesting.
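A toy model may help here. In the sketch below the test never creates its own randomness; it asks an entropy source that something else controls. The `explore` function is a hypothetical stand-in for the platform, not how Antithesis actually works: it brute-forces every value at every choice point and reruns from the start each time, whereas the real platform branches from where it already is.

```python
from itertools import product

def a():
    return "a"

def b():
    return "b"

API = [a, b]

def run_test(entropy, steps):
    """Make `steps` API calls, asking `entropy` which one to call each time."""
    return [API[next(entropy) % len(API)]() for _ in range(steps)]

def explore(steps):
    """Hypothetical explorer: supply every possible value at every choice point."""
    histories = set()
    for values in product(range(len(API)), repeat=steps):
        histories.add(tuple(run_test(iter(values), steps)))
    return histories

histories = explore(3)
print(len(histories))  # 8
```

The test itself only makes each call sequence possible. Whoever controls the entropy decides which of the 2 × 2 × 2 = 8 histories actually gets run.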

On top of that, our engineer can modify their test() function to loop forever instead of to 100, since Antithesis can just choose to stop running and prune away uninteresting branches. Now they can let their tests run forever with no issues, because the Antithesis platform will take care of stopping them.

    def test():
        while True:
            func = choose_function()
            func()

Our engineer has definitely made some progress towards trying various random combinations, but wait! Chances are, if they’re using Antithesis, their software has some degree of concurrency involved. Ignoring this would neglect a huge portion of their test cases.

    import asyncio
    from asyncio import Task

    async def test():
        tasks = []
        while True:
            func = choose_function()
            # a() and b() must be coroutine functions for create_task() to accept them
            task: Task[None] = asyncio.create_task(func())
            tasks.append(task)
            await asyncio.sleep(5)  # prevent a crazy tight loop

Cool, now they’re kicking off randomized, concurrent tasks every 5 seconds. Tests aren’t just about making software do things though – the whole point is to check what was done for correctness! They can’t forget to add validations: how else will they know that their API calls completed successfully? 3

    async def test():
        tasks = []
        while True:
            func = choose_function()
            task: Task[None] = asyncio.create_task(func())
            tasks.append(task)
            # Pass the callback itself; asyncio calls it with the finished task
            task.add_done_callback(validate_return_value)
            await asyncio.sleep(5)

Why work so hard?

At last, our engineer’s test is starting to shape up… for their system with only 2 API calls… where we just assume that repeatedly calling these functions concurrently poses no issue. Chances are, anything you want to test involves way more complexity than that.
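Even the validation step hides some work. The post doesn't show `validate_return_value`, so here is a minimal sketch of what such a done callback could look like, assuming a call counts as successful when it returns `"ok"` without raising. The `failures` list, the expected value and the placeholder `a()` and `b()` coroutines are all invented for this example.

```python
import asyncio

failures = []

async def a():
    return "ok"

async def b():
    raise RuntimeError("b failed")

def validate_return_value(task):
    """Done callback: record the call if it raised or returned something unexpected."""
    if task.cancelled():
        return
    exc = task.exception()
    if exc is not None:
        failures.append(repr(exc))
    elif task.result() != "ok":
        failures.append(f"unexpected result: {task.result()!r}")

async def main():
    tasks = [asyncio.create_task(func()) for func in (a, b)]
    for task in tasks:
        task.add_done_callback(validate_return_value)
    # return_exceptions=True keeps b()'s failure from propagating out of gather()
    await asyncio.gather(*tasks, return_exceptions=True)
    await asyncio.sleep(0)  # give any pending done callbacks a chance to run

asyncio.run(main())
print(failures)
```

The callback receives the finished task, so it has to ask the task for its exception or result rather than getting the return value directly.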

Maybe you see why we want to make this process a bit easier for our users. Our engineer could’ve finished much faster if they’d been able to simply provide API calls a() and b().

Since these best practices are fairly universal across all tests, we thought Antithesis could do most of the work for you. Like I mentioned above, we’ve been working on a new Test Composer to enable exactly that!

Now, you can make all the scheduling, randomizing and concurrency handling in our engineer’s final test template the Test Composer’s responsibility. Let’s keep using our example engineer’s system, but try writing their test using the Test Composer this time. Instead of handing us one big test, they’ll make an image with the following directory structure:

    /opt/antithesis/test/v1/<my_test_dir>/
        parallel_driver_a.py
        parallel_driver_b.py

Where the contents of parallel_driver_a.py look something like this:

    def test():
        res = a()
        validate_result(res)

This directory structure indicates that the two .py files are test commands which can be scheduled with each other. As you can see, the composer expects the contents of the test directory to be executable scripts or binaries called “commands”. By prefixing the command names here with parallel_driver, we let the Test Composer know that these are tests that should run in parallel.

The Test Composer will then handle looping, picking a command to run at random (there’s a bit more logic involved in which command types may run with each other, and how often they’re run), and reaching different levels of parallelism. Perhaps we don’t actually want these requests to run in parallel. No worries! We can rename their prefixes to serial_driver, and now they’ll only ever run in series.
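To give a feel for what the prefixes buy you, here is a deliberately simplified sketch of a prefix-driven scheduler. It is not the Test Composer's actual logic, which is more involved as noted above. The `classify` and `plan_round` helpers, the `max_parallel` limit and the rule that a serial command runs on its own are assumptions made for illustration, and `choose` stands in for whatever supplies the platform's randomness.

```python
PREFIXES = ("parallel_driver", "serial_driver")

def classify(commands):
    """Group command file names by the prefix that says how they may run."""
    groups = {prefix: [] for prefix in PREFIXES}
    for name in commands:
        for prefix in PREFIXES:
            if name.startswith(prefix + "_"):
                groups[prefix].append(name)
    return groups

def plan_round(commands, choose, max_parallel=3):
    """Decide what to start next: one serial command alone, or several parallel ones."""
    groups = classify(commands)
    first = choose(groups["parallel_driver"] + groups["serial_driver"])
    if first in groups["serial_driver"]:
        return [first]
    width = choose(list(range(1, max_parallel + 1)))
    return [first] + [choose(groups["parallel_driver"]) for _ in range(width - 1)]

def pick_last(options):
    """Fixed 'entropy' so the example is repeatable."""
    return options[-1]

commands = ["parallel_driver_a.py", "parallel_driver_b.py"]
renamed = [name.replace("parallel_driver", "serial_driver") for name in commands]

print(plan_round(commands, pick_last))
print(plan_round(renamed, pick_last))
```

With the parallel prefixes, a round can start several commands at once. After the rename, each round starts exactly one, and the test scripts themselves didn't change at all.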

Test it out

The Test Composer helps to incorporate good practices even if you just want to port your existing integration tests to our system. Simply throwing all your previous tests into a single test violates the principles of encouraging randomness and “trying anything, sometimes” we talked about above. But by adding each test as a separate command, you don’t have to do much extra work, and the Test Composer will handle the principles for you.

Our composer works even better with tests written specifically for our system, and there’s much more to talk about – but I’ll let our docs handle that.

Test Composer is available to all our existing customers today. And if you’re not already a customer, do get in touch! We’ve just made it a little easier to get started.